mirror of

https://github.com/lancedb/lancedb.git

synced 2025-12-23 05:19:58 +00:00

docs: assorted copyedits (#1998)

This includes a handful of minor edits I made while reading the docs. In addition to a few spelling fixes,

* standardize on "rerank" over "re-rank" in prose
* terminate sentences with periods or colons as appropriate
* replace some usage of dashes with colons, such as in "Try it yourself - <link>"

All changes are surface-level. No changes to semantics or structure.

---------

Co-authored-by: Will Jones <willjones127@gmail.com>


@@ -1,8 +1,8 @@

|

||||

## Improving retriever performance

|

||||

|

||||

Try it yourself - <a href="https://colab.research.google.com/github/lancedb/lancedb/blob/main/docs/src/notebooks/lancedb_reranking.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a><br/>

|

||||

Try it yourself: <a href="https://colab.research.google.com/github/lancedb/lancedb/blob/main/docs/src/notebooks/lancedb_reranking.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a><br/>

|

||||

|

||||

VectorDBs are used as retreivers in recommender or chatbot-based systems for retrieving relevant data based on user queries. For example, retriever is a critical component of Retrieval Augmented Generation (RAG) acrhitectures. In this section, we will discuss how to improve the performance of retrievers.

|

||||

VectorDBs are used as retrievers in recommender or chatbot-based systems for retrieving relevant data based on user queries. For example, retrievers are a critical component of Retrieval Augmented Generation (RAG) architectures. In this section, we will discuss how to improve the performance of retrievers.

|

||||

|

||||

There are several ways to improve the performance of retrievers. Some of the common techniques are:

|

||||

|

||||

@@ -19,7 +19,7 @@ Using different embedding models is something that's very specific to the use ca

|

||||

|

||||

|

||||

## The dataset

|

||||

We'll be using a QA dataset generated using a LLama2 review paper. The dataset contains 221 query, context and answer triplets. The queries and answers are generated using GPT-4 based on a given query. Full script used to generate the dataset can be found on this [repo](https://github.com/lancedb/ragged). It can be downloaded from [here](https://github.com/AyushExel/assets/blob/main/data_qa.csv)

|

||||

We'll be using a QA dataset generated using a LLama2 review paper. The dataset contains 221 query, context and answer triplets. The queries and answers are generated using GPT-4 based on a given query. Full script used to generate the dataset can be found on this [repo](https://github.com/lancedb/ragged). It can be downloaded from [here](https://github.com/AyushExel/assets/blob/main/data_qa.csv).

|

||||

|

||||

### Using different query types

|

||||

Let's set up the embeddings and the dataset first. We'll use LanceDB's `huggingface` embeddings integration for this guide.

|

||||

@@ -45,14 +45,14 @@ table.add(df[["context"]].to_dict(orient="records"))

|

||||

queries = df["query"].tolist()

|

||||

```

|

||||

|

||||

Now that we have the dataset and embeddings table set up, here's how you can run different query types on the dataset.

|

||||

Now that we have the dataset and embeddings table set up, here's how you can run different query types on the dataset:

|

||||

|

||||

* <b> Vector Search: </b>

|

||||

|

||||

```python

|

||||

table.search(queries[0], query_type="vector").limit(5).to_pandas()

|

||||

```

|

||||

By default, LanceDB uses vector search query type for searching and it automatically converts the input query to a vector before searching when using embedding API. So, the following statement is equivalent to the above statement.

|

||||

By default, LanceDB uses the vector search query type and, when the embedding API is used, automatically converts the input query to a vector before searching. So, the following statement is equivalent to the one above:

|

||||

|

||||

```python

|

||||

table.search(queries[0]).limit(5).to_pandas()

|

||||

@@ -77,7 +77,7 @@ Now that we have the dataset and embeddings table set up, here's how you can run

|

||||

|

||||

* <b> Hybrid Search: </b>

|

||||

|

||||

Hybrid search is a combination of vector and full-text search. Here's how you can run a hybrid search query on the dataset.

|

||||

Hybrid search is a combination of vector and full-text search. Here's how you can run a hybrid search query on the dataset:

|

||||

```python

|

||||

table.search(queries[0], query_type="hybrid").limit(5).to_pandas()

|

||||

```

|

||||

@@ -87,7 +87,7 @@ Now that we have the dataset and embeddings table set up, here's how you can run

|

||||

|

||||

!!! note "Note"

|

||||

By default, it uses `LinearCombinationReranker` that combines the scores from vector and full-text search using a weighted linear combination. It is the simplest reranker implementation available in LanceDB. You can also use other rerankers like `CrossEncoderReranker` or `CohereReranker` for reranking the results.

|

||||

Learn more about rerankers [here](https://lancedb.github.io/lancedb/reranking/)

|

||||

Learn more about rerankers [here](https://lancedb.github.io/lancedb/reranking/).

|

||||

|

||||

|

||||

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

Continuing from the previous section, we can now rerank the results using more complex rerankers.

|

||||

|

||||

Try it yourself - <a href="https://colab.research.google.com/github/lancedb/lancedb/blob/main/docs/src/notebooks/lancedb_reranking.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a><br/>

|

||||

Try it yourself: <a href="https://colab.research.google.com/github/lancedb/lancedb/blob/main/docs/src/notebooks/lancedb_reranking.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a><br/>

|

||||

|

||||

## Reranking search results

|

||||

You can rerank any search results using a reranker. The syntax for reranking is as follows:

|

||||

@@ -62,9 +62,6 @@ Let us take a look at the same datasets from the previous sections, using the sa

|

||||

| Reranked fts | 0.672 |

|

||||

| Hybrid | 0.759 |

|

||||

|

||||

### SQuAD Dataset

|

||||

|

||||

|

||||

### Uber10K sec filing Dataset

|

||||

|

||||

| Query Type | Hit-rate@5 |

|

||||

|

||||

@@ -1,5 +1,5 @@

|

||||

## Finetuning the Embedding Model

|

||||

Try it yourself - <a href="https://colab.research.google.com/github/lancedb/lancedb/blob/main/docs/src/notebooks/embedding_tuner.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a><br/>

|

||||

Try it yourself: <a href="https://colab.research.google.com/github/lancedb/lancedb/blob/main/docs/src/notebooks/embedding_tuner.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a><br/>

|

||||

|

||||

Another way to improve retriever performance is to fine-tune the embedding model itself. Fine-tuning the embedding model can help in learning better representations for the documents and queries in the dataset. This can be particularly useful when the dataset is very different from the pre-trained data used to train the embedding model.

|

||||

|

||||

@@ -16,7 +16,7 @@ validation_df.to_csv("data_val.csv", index=False)

|

||||

You can use any tuning API to fine-tune embedding models. In this example, we'll utilise Llama-index as it also comes with utilities for synthetic data generation and training the model.

|

||||

|

||||

|

||||

Then parse the dataset as llama-index text nodes and generate synthetic QA pairs from each node.

|

||||

We parse the dataset as llama-index text nodes and generate synthetic QA pairs from each node:

|

||||

```python

|

||||

from llama_index.core.node_parser import SentenceSplitter

|

||||

from llama_index.readers.file import PagedCSVReader

|

||||

@@ -43,7 +43,7 @@ val_dataset = generate_qa_embedding_pairs(

|

||||

)

|

||||

```

|

||||

|

||||

Now we'll use `SentenceTransformersFinetuneEngine` engine to fine-tune the model. You can also use `sentence-transformers` or `transformers` library to fine-tune the model.

|

||||

Now we'll use the `SentenceTransformersFinetuneEngine` engine to fine-tune the model. You can also use the `sentence-transformers` or `transformers` libraries to fine-tune the model:

|

||||

|

||||

```python

|

||||

from llama_index.finetuning import SentenceTransformersFinetuneEngine

|

||||

@@ -57,7 +57,7 @@ finetune_engine = SentenceTransformersFinetuneEngine(

|

||||

finetune_engine.finetune()

|

||||

embed_model = finetune_engine.get_finetuned_model()

|

||||

```

|

||||

This saves the fine tuned embedding model in `tuned_model` folder. This al

|

||||

This saves the fine-tuned embedding model in the `tuned_model` folder.

|

||||

|

||||

# Evaluation results

|

||||

To evaluate the retriever, you can either use this model to ingest the data into LanceDB directly, or use llama-index's LanceDB integration to create a `VectorStoreIndex` and use it as a retriever.

|

||||

|

||||

@@ -3,22 +3,22 @@

|

||||

Hybrid Search is a broad (often misused) term. It can mean anything from combining multiple methods for searching, to applying ranking methods to better sort the results. In this blog, we use the definition of "hybrid search" to mean using a combination of keyword-based and vector search.

|

||||

|

||||

## The challenge of (re)ranking search results

|

||||

Once you have a group of the most relevant search results from multiple search sources, you'd likely standardize the score and rank them accordingly. This process can also be seen as another independent step - reranking.

|

||||

Once you have a group of the most relevant search results from multiple search sources, you'd likely standardize the score and rank them accordingly. This process can also be seen as another independent step: reranking.

|

||||

There are two approaches for reranking search results from multiple sources.

|

||||

|

||||

* <b>Score-based</b>: Calculate final relevance scores based on a weighted linear combination of individual search algorithm scores. Example - Weighted linear combination of semantic search & keyword-based search results.

|

||||

* <b>Score-based</b>: Calculate final relevance scores based on a weighted linear combination of individual search algorithm scores. Example: Weighted linear combination of semantic search & keyword-based search results.

|

||||

|

||||

* <b>Relevance-based</b>: Discards the existing scores and calculates the relevance of each search result - query pair. Example - Cross Encoder models

|

||||

* <b>Relevance-based</b>: Discards the existing scores and calculates the relevance of each search result-query pair. Example: Cross Encoder models

|

||||

|

||||

Even though there are many strategies for reranking search results, none works for all cases. Moreover, evaluating them itself is a challenge. Also, reranking can be dataset, application specific so it's hard to generalize.

|

||||

Even though there are many strategies for reranking search results, none works for all cases. Moreover, evaluating them is itself a challenge. Reranking can also be dataset- or application-specific, so it's hard to generalize.
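The score-based approach described above can be sketched in a few lines of plain Python. This is a minimal illustration, not LanceDB's implementation: the function names, the min-max normalization step, and the `0.7` weight are all assumptions chosen for the example.

```python
def min_max_normalize(scores):
    """Rescale scores to [0, 1]; a constant list maps to all zeros."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return [0.0 for _ in scores]
    return [(s - lo) / (hi - lo) for s in scores]


def linear_combination(vector_scores, fts_scores, weight=0.7):
    """Blend per-document scores: weight * vector + (1 - weight) * full-text."""
    if len(vector_scores) != len(fts_scores):
        raise ValueError("score lists must be aligned per document")
    v = min_max_normalize(vector_scores)
    f = min_max_normalize(fts_scores)
    return [weight * a + (1 - weight) * b for a, b in zip(v, f)]


# Hypothetical scores for three documents (higher means more relevant).
combined = linear_combination([0.9, 0.5, 0.1], [2.0, 8.0, 5.0], weight=0.7)

# Document indices ordered from most to least relevant.
ranking = sorted(range(len(combined)), key=lambda i: combined[i], reverse=True)
```

Normalizing first matters because vector similarity and keyword scores usually live on different scales; without it, the larger-valued source would dominate regardless of the weight.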

|

||||

|

||||

### Example evaluation of hybrid search with Reranking

|

||||

|

||||

Here's some evaluation numbers from experiment comparing these re-rankers on about 800 queries. It is modified version of an evaluation script from [llama-index](https://github.com/run-llama/finetune-embedding/blob/main/evaluate.ipynb) that measures hit-rate at top-k.

|

||||

Here are some evaluation numbers from an experiment comparing these rerankers on about 800 queries. The experiment uses a modified version of an evaluation script from [llama-index](https://github.com/run-llama/finetune-embedding/blob/main/evaluate.ipynb) that measures hit-rate at top-k.
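For readers unfamiliar with the metric, hit-rate at top-k is the fraction of queries whose expected document shows up among the first k results. The sketch below is an illustration only and is not taken from the linked evaluation script; the function name and the sample ids are hypothetical.

```python
def hit_rate_at_k(retrieved_ids, expected_ids, k=5):
    """Fraction of queries whose expected document appears in the top-k results."""
    if len(retrieved_ids) != len(expected_ids):
        raise ValueError("need one expected id per query")
    if not expected_ids:
        return 0.0
    hits = sum(
        1
        for ranked, expected in zip(retrieved_ids, expected_ids)
        if expected in ranked[:k]
    )
    return hits / len(expected_ids)


# Hypothetical results for three queries; each inner list is ranked best-first.
retrieved = [["d1", "d7", "d3"], ["d4", "d2", "d9"], ["d5", "d6", "d8"]]
expected = ["d3", "d9", "d0"]

score_top3 = hit_rate_at_k(retrieved, expected, k=3)  # two of three queries hit
score_top2 = hit_rate_at_k(retrieved, expected, k=2)  # no query hits
```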

|

||||

|

||||

<b> With OpenAI ada2 embedding </b>

|

||||

|

||||

Vector Search baseline - `0.64`

|

||||

Vector Search baseline: `0.64`

|

||||

|

||||

| Reranker | Top-3 | Top-5 | Top-10 |

|

||||

| --- | --- | --- | --- |

|

||||

@@ -33,7 +33,7 @@ Vector Search baseline - `0.64`

|

||||

|

||||

<b> With OpenAI embedding-v3-small </b>

|

||||

|

||||

Vector Search baseline - `0.59`

|

||||

Vector Search baseline: `0.59`

|

||||

|

||||

| Reranker | Top-3 | Top-5 | Top-10 |

|

||||

| --- | --- | --- | --- |

|

||||

|

||||

@@ -40,7 +40,7 @@ table.add(data)

|

||||

|

||||

# Create a fts index before the hybrid search

|

||||

table.create_fts_index("text")

|

||||

# hybrid search with default re-ranker

|

||||

# hybrid search with default reranker

|

||||

results = table.search("flower moon", query_type="hybrid").to_pandas()

|

||||

```

|

||||

!!! Note

|

||||

@@ -68,7 +68,7 @@ By default, LanceDB uses `RRFReranker()`, which uses reciprocal rank fusion scor

|

||||

|

||||

|

||||

## Available Rerankers

|

||||

LanceDB provides a number of re-rankers out of the box. You can use any of these re-rankers by passing them to the `rerank()` method.

|

||||

LanceDB provides a number of rerankers out of the box. You can use any of these rerankers by passing them to the `rerank()` method.

|

||||

Go to [Rerankers](../reranking/index.md) to learn more about using the available rerankers and implementing custom rerankers.
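For intuition about rank-based fusion, such as the reciprocal rank fusion that `RRFReranker` is named after, here is a small standalone sketch. It is not LanceDB's code: the function name is hypothetical, and the constant `k=60` is a commonly used value assumed here rather than a confirmed library default.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse ranked id lists: each list adds 1 / (k + rank) to a document's score."""
    scores = defaultdict(float)
    for ranked_ids in rankings:
        for rank, doc_id in enumerate(ranked_ids, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by id for a stable order.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical result lists from vector search and full-text search.
vector_hits = ["a", "b", "c"]
fts_hits = ["b", "d", "a"]

fused = reciprocal_rank_fusion([vector_hits, fts_hits])
fused_ids = [doc_id for doc_id, _ in fused]
```

Because only ranks are used, this kind of fusion needs no score normalization, which is one reason it is a convenient default for combining vector and full-text results.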

|

||||

|

||||

|

||||

|

||||

@@ -66,7 +66,7 @@ the size of the data.

|

||||

|

||||

### Embedding Functions

|

||||

|

||||

The embedding API has been completely reworked, and it now more closely resembles the Python API, including the new [embedding registry](./js/classes/embedding.EmbeddingFunctionRegistry.md)

|

||||

The embedding API has been completely reworked, and it now more closely resembles the Python API, including the new [embedding registry](./js/classes/embedding.EmbeddingFunctionRegistry.md):

|

||||

|

||||

=== "vectordb (deprecated)"

|

||||

|

||||

|

||||

@@ -207,7 +207,7 @@

|

||||

"cell_type": "markdown",

|

||||

"source": [

|

||||

"## The dataset\n",

|

||||

"The dataset we'll use is a synthetic QA dataset generated from LLama2 review paper. The paper was divided into chunks, with each chunk being a unique context. An LLM was prompted to ask questions relevant to the context for testing a retreiver.\n",

|

||||

"The dataset we'll use is a synthetic QA dataset generated from LLama2 review paper. The paper was divided into chunks, with each chunk being a unique context. An LLM was prompted to ask questions relevant to the context for testing a retriever.\n",

|

||||

"The exact code and other utility functions for this can be found in [this](https://github.com/lancedb/ragged) repo\n"

|

||||

],

|

||||

"metadata": {

|

||||

|

||||

@@ -477,7 +477,7 @@

|

||||

"source": [

|

||||

"## Vector Search\n",

|

||||

"\n",

|

||||

"avg latency - `3.48 ms ± 71.6 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)`"

|

||||

"Average latency: `3.48 ms ± 71.6 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)`"

|

||||

]

|

||||

},

|

||||

{

|

||||

@@ -597,7 +597,7 @@

|

||||

"`LinearCombinationReranker(weight=0.7)` is used as the default reranker for reranking the hybrid search results if the reranker isn't specified explicitly.\n",

|

||||

"The `weight` param controls the weightage provided to vector search score. The weight of `1-weight` is applied to FTS scores when reranking.\n",

|

||||

"\n",

|

||||

"Latency - `71 ms ± 25.4 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)`"

|

||||

"Latency: `71 ms ± 25.4 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)`"

|

||||

]

|

||||

},

|

||||

{

|

||||

@@ -675,9 +675,9 @@

|

||||

},

|

||||

"source": [

|

||||

"### Cohere Reranker\n",

|

||||

"This uses Cohere's Reranking API to re-rank the results. It accepts the reranking model name as a parameter. By Default it uses the english-v3 model but you can easily switch to a multi-lingual model.\n",

|

||||

"This uses Cohere's Reranking API to rerank the results. It accepts the reranking model name as a parameter. By default it uses the english-v3 model, but you can easily switch to a multilingual model.\n",

|

||||

"\n",

|

||||

"latency - `605 ms ± 78.1 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)`"

|

||||

"Latency: `605 ms ± 78.1 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)`"

|

||||

]

|

||||

},

|

||||

{

|

||||

@@ -1165,7 +1165,7 @@

|

||||

},

|

||||

"source": [

|

||||

"### ColBERT Reranker\n",

|

||||

"Colber Reranker is powered by ColBERT model. It runs locally using the huggingface implementation.\n",

|

||||

"ColBERT Reranker is powered by the ColBERT model. It runs locally using the Hugging Face implementation.\n",

|

||||

"\n",

|

||||

"Latency - `950 ms ± 5.78 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)`\n",

|

||||

"\n",

|

||||

@@ -1489,9 +1489,9 @@

|

||||

},

|

||||

"source": [

|

||||

"### Cross Encoder Reranker\n",

|

||||

"Uses cross encoder models are rerankers. Uses sentence transformer implemntation locally\n",

|

||||

"Uses cross-encoder models as rerankers. Uses the sentence-transformers implementation locally.\n",

|

||||

"\n",

|

||||

"Latency - `1.38 s ± 64.6 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)`"

|

||||

"Latency: `1.38 s ± 64.6 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)`"

|

||||

]

|

||||

},

|

||||

{

|

||||

@@ -1771,10 +1771,10 @@

|

||||

"source": [

|

||||

"### (Experimental) OpenAI Reranker\n",

|

||||

"\n",

|

||||

"This prompts chat model to rerank results which is not a dedicated reranker model. This should be treated as experimental. You might run out of token limit so set the search limits based on your token limit.\n",

|

||||

"NOTE: It is recommended to use `gpt-4-turbo-preview`, older models might lead to bad behaviour\n",

|

||||

"This prompts a chat model to rerank results and is not a dedicated reranker model. This should be treated as experimental. You might exceed the token limit so set the search limits based on your token limit.\n",

|

||||

"NOTE: It is recommended to use `gpt-4-turbo-preview` as older models might lead to bad behaviour\n",

|

||||

"\n",

|

||||

"Latency - `Can take 10s of seconds if using GPT-4 model`"

|

||||

"Latency: `Can take 10s of seconds if using GPT-4 model`"

|

||||

]

|

||||

},

|

||||

{

|

||||

@@ -1817,7 +1817,7 @@

|

||||

},

|

||||

"source": [

|

||||

"## Use your custom Reranker\n",

|

||||

"Hybrid search in LanceDB is designed to be very flexible. You can easily plug in your own Re-reranking logic. To do so, you simply need to implement the base Reranker class"

|

||||

"Hybrid search in LanceDB is designed to be very flexible. You can easily plug in your own reranking logic. To do so, you simply need to implement the base Reranker class:"

|

||||

]

|

||||

},

|

||||

{

|

||||

@@ -1849,9 +1849,9 @@

|

||||

"source": [

|

||||

"### Custom Reranker based on CohereReranker\n",

|

||||

"\n",

|

||||

"For the sake of simplicity let's build custom reranker that just enchances the Cohere Reranker by accepting a filter query, and accept other CohereReranker params as kwags.\n",

|

||||

"For the sake of simplicity let's build a custom reranker that enhances the Cohere Reranker by accepting a filter query, and accepts other CohereReranker params as kwargs.\n",

|

||||

"\n",

|

||||

"For this toy example let's say we want to get rid of docs that represent a table of contents, appendix etc. as these are semantically close of representing costs but this isn't something we are interested in because they don't represent the specific reasons why operating costs were high. They simply represent the costs."

|

||||

"For this toy example let's say we want to get rid of docs that represent a table of contents or appendix, as these are semantically close to representing costs but don't represent the specific reasons why operating costs were high."

|

||||

]

|

||||

},

|

||||

{

|

||||

@@ -1969,7 +1969,7 @@

|

||||

"id": "b3b5464a-7252-4eab-aaac-9b0eae37496f"

|

||||

},

|

||||

"source": [

|

||||

"As you can see the document containing the Table of contetnts of spending no longer shows up"

|

||||

"As you can see, the document containing the table of contents no longer shows up."

|

||||

]

|

||||

}

|

||||

],

|

||||

|

||||

@@ -49,7 +49,7 @@

|

||||

},

|

||||

"source": [

|

||||

"## What is a retriever\n",

|

||||

"VectorDBs are used as retreivers in recommender or chatbot-based systems for retrieving relevant data based on user queries. For example, retriever is a critical component of Retrieval Augmented Generation (RAG) acrhitectures. In this section, we will discuss how to improve the performance of retrievers.\n",

|

||||

"VectorDBs are used as retrievers in recommender or chatbot-based systems for retrieving relevant data based on user queries. For example, retrievers are a critical component of Retrieval Augmented Generation (RAG) architectures. In this section, we will discuss how to improve the performance of retrievers.\n",

|

||||

"\n",

|

||||

"<img src=\"https://llmstack.ai/assets/images/rag-f517f1f834bdbb94a87765e0edd40ff2.png\" />\n",

|

||||

"\n",

|

||||

@@ -64,7 +64,7 @@

|

||||

"- Fine-tuning the embedding models\n",

|

||||

"- Using different embedding models\n",

|

||||

"\n",

|

||||

"Obviously, the above list is not exhaustive. There are other subtler ways that can improve retrieval performance like experimenting chunking algorithms, using different distance/similarity metrics etc. But for brevity, we'll only cover high level and more impactful techniques here.\n",

|

||||

"Obviously, the above list is not exhaustive. There are other subtler ways that can improve retrieval performance like alternative chunking algorithms, using different distance/similarity metrics, and more. For brevity, we'll only cover high level and more impactful techniques here.\n",

|

||||

"\n"

|

||||

]

|

||||

},

|

||||

@@ -77,7 +77,7 @@

|

||||

"# LanceDB\n",

|

||||

"- Multimodal DB for AI\n",

|

||||

"- Powered by an innovative & open-source in-house file format\n",

|

||||

"- 0 Setup\n",

|

||||

"- Zero setup\n",

|

||||

"- Scales up on disk storage\n",

|

||||

"- Native support for vector, full-text(BM25) and hybrid search\n",

|

||||

"\n",

|

||||

@@ -92,8 +92,8 @@

|

||||

},

|

||||

"source": [

|

||||

"## The dataset\n",

|

||||

"The dataset we'll use is a synthetic QA dataset generated from LLama2 review paper. The paper was divided into chunks, with each chunk being a unique context. An LLM was prompted to ask questions relevant to the context for testing a retreiver.\n",

|

||||

"The exact code and other utility functions for this can be found in [this](https://github.com/lancedb/ragged) repo\n"

|

||||

"The dataset we'll use is a synthetic QA dataset generated from LLama2 review paper. The paper was divided into chunks, with each chunk being a unique context. An LLM was prompted to ask questions relevant to the context for testing a retriever.\n",

|

||||

"The exact code and other utility functions for this can be found in [this](https://github.com/lancedb/ragged) repo.\n"

|

||||

]

|

||||

},

|

||||

{

|

||||

@@ -594,10 +594,10 @@

|

||||

},

|

||||

"source": [

|

||||

"## Ingestion\n",

|

||||

"Let us now ingest the contexts in LanceDB\n",

|

||||

"Let us now ingest the contexts in LanceDB. The steps will be:\n",

|

||||

"\n",

|

||||

"- Create a schema (Pydantic or Pyarrow)\n",

|

||||

"- Select an embedding model from LanceDB Embedding API (Allows automatic vectorization of data)\n",

|

||||

"- Select an embedding model from LanceDB Embedding API (to allow automatic vectorization of data)\n",

|

||||

"- Ingest the contexts\n"

|

||||

]

|

||||

},

|

||||

@@ -841,7 +841,7 @@

|

||||

},

|

||||

"source": [

|

||||

"## Different Query types in LanceDB\n",

|

||||

"LanceDB allows switching query types with by setting `query_type` argument, which defaults to `vector` when using Embedding API. In this example we'll use `JinaReranker` which is one of many rerankers supported by LanceDB\n",

|

||||

"LanceDB allows switching query types by setting the `query_type` argument, which defaults to `vector` when using the Embedding API. In this example we'll use `JinaReranker`, which is one of many rerankers supported by LanceDB.\n",

|

||||

"\n",

|

||||

"### Vector search:\n",

|

||||

"Vector search\n",

|

||||

@@ -1446,11 +1446,11 @@

|

||||

"source": [

|

||||

"## Takeaways & Tradeoffs\n",

|

||||

"\n",

|

||||

"* **Easiest method to significantly improve accuracy** Using Hybrid search and/or rerankers can significantly improve retrieval performance without spending any additional time or effort on tuning embedding models, generators, or dissecting the dataset.\n",

|

||||

"* **Rerankers significantly improve accuracy at little cost.** Using Hybrid search and/or rerankers can significantly improve retrieval performance without spending any additional time or effort on tuning embedding models, generators, or dissecting the dataset.\n",

|

||||

"\n",

|

||||

"* **Reranking is an expensive operation.** Depending on the type of reranker you choose, it can add significant latency to query times, although some API-based rerankers can be significantly faster.\n",

|

||||

"\n",

|

||||

"* When using models locally, having a warmed-up GPU environment will significantly reduce latency. This is specially useful if the application doesn't need to be strcitly realtime. The tradeoff being GPU resources."

|

||||

"* **Pre-warmed GPU environments reduce latency.** When using models locally, having a warmed-up GPU environment will significantly reduce latency. This is especially useful if the application doesn't need to be strictly realtime. Pre-warming comes at the expense of GPU resources."

|

||||

]

|

||||

},

|

||||

{

|

||||

|

||||

@@ -188,7 +188,7 @@

|

||||

"id": "4ba9ffac-c779-49e3-91a7-f1c00f3fda41",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"Creating a LanceDB table from a pandas dataframe is straightforward using `create_table`"

|

||||

"Creating a LanceDB table from a pandas dataframe is straightforward using `create_table`:"

|

||||

]

|

||||

},

|

||||

{

|

||||

@@ -457,7 +457,7 @@

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"Ok so this is a vector database, so we need actual vectors.\n",

|

||||

"We'll use sentence transformers here to avoid having to deal with api keys and all that."

|

||||

"We'll use sentence transformers here to avoid having to deal with API keys."

|

||||

]

|

||||

},

|

||||

{

|

||||

@@ -465,7 +465,7 @@

|

||||

"id": "85db4ed9-8f80-4b56-9867-1381fa1c4c7d",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"Let's create a basic model using the \"all-MiniLM-L6-v2\" model and embed the quotes"

|

||||

"Let's create a basic model using the \"all-MiniLM-L6-v2\" model and embed the quotes:"

|

||||

]

|

||||

},

|

||||

{

|

||||

@@ -498,7 +498,7 @@

|

||||

"source": [

|

||||

"We can then convert the vectors into a pyarrow Table and merge it to the LanceDB Table.\n",

|

||||

"\n",

|

||||

"For the merge to work successfully, we need to have an overlapping column. Here the natural choice is to use the id column"

|

||||

"For the merge to work successfully, we need to have an overlapping column. Here the natural choice is to use the id column:"

|

||||

]

|

||||

},

|

||||

{

|

||||

@@ -599,7 +599,7 @@

|

||||

"id": "518da48d-6481-4c1e-8ba4-800d5e0542cf",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"And now we'll use the `LanceTable.merge` function to add the vector column into the LanceTable."

|

||||

"And now we'll use the `LanceTable.merge` function to add the vector column into the LanceTable:"

|

||||

]

|

||||

},

|

||||

{

|

||||

@@ -706,7 +706,7 @@

|

||||

"id": "f590fec8-0ed0-4148-b940-c81abe7b421c",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"If we look at the schema, we see that `all-MiniLM-L6-v2` produces 384-dimensional vectors"

|

||||

"If we look at the schema, we see that `all-MiniLM-L6-v2` produces 384-dimensional vectors:"

|

||||

]

|

||||

},

|

||||

{

|

||||

@@ -945,7 +945,7 @@

|

||||

"source": [

|

||||

"### Switching Models\n",

|

||||

"\n",

|

||||

"Now we'll switch to the `all-mpnet-base-v2` model and add the vectors to the restored dataset again"

|

||||

"Now we'll switch to the `all-mpnet-base-v2` model and add the vectors to the restored dataset again:"

|

||||

]

|

||||

},

|

||||

{

|

||||

@@ -1018,7 +1018,7 @@

|

||||

"## Deletion\n",

|

||||

"\n",

|

||||

"What if the whole show was just Rick-isms? \n",

|

||||

"Let's delete any quote not said by Rick"

|

||||

"Let's delete any quote not said by Rick:"

|

||||

]

|

||||

},

|

||||

{

|

||||

@@ -1161,7 +1161,7 @@

|

||||

"id": "97a1cf79-b46b-40cd-ada0-54edef358627",

|

||||

"metadata": {},

|

||||

"source": [

|

||||

"We never had to explicitly manage the versioning. And we never had to create expensive and slow snapshots. LanceDB automatically tracks the full history of operations I created and supports fast rollbacks. In production this is critical for debugging issues and minimizing downtime by rolling back to a previously successful state in seconds."

|

||||

"We never had to explicitly manage the versioning. And we never had to create expensive and slow snapshots. LanceDB automatically tracks the full history of operations and supports fast rollbacks. In production this is critical for debugging issues and minimizing downtime by rolling back to a previously successful state in seconds."

|

||||

]

|

||||

}

|

||||

],

|

||||

|

||||

@@ -2,7 +2,7 @@

|

||||

====================================================================

|

||||

Adaptive RAG introduces a RAG technique that combines query analysis with self-corrective RAG.

|

||||

|

||||

For Query Analysis, it uses a small classifier(LLM), to decide the query’s complexity. Query Analysis helps routing smoothly to adjust between different retrieval strategies No retrieval, Single-shot RAG or Iterative RAG.

|

||||

For Query Analysis, it uses a small classifier (LLM) to decide the query’s complexity. Query Analysis guides adjustment between different retrieval strategies: No retrieval, Single-shot RAG, or Iterative RAG.

|

||||

|

||||

**[Official Paper](https://arxiv.org/pdf/2403.14403)**

|

||||

|

||||

@@ -12,9 +12,9 @@ For Query Analysis, it uses a small classifier(LLM), to decide the query’s com

|

||||

</figcaption>

|

||||

</figure>

|

||||

|

||||

**[Offical Implementation](https://github.com/starsuzi/Adaptive-RAG)**

|

||||

**[Official Implementation](https://github.com/starsuzi/Adaptive-RAG)**

|

||||

|

||||

Here’s a code snippet for query analysis

|

||||

Here’s a code snippet for query analysis:

|

||||

|

||||

```python

|

||||

from langchain_core.prompts import ChatPromptTemplate

|

||||

@@ -35,7 +35,7 @@ llm = ChatOpenAI(model="gpt-3.5-turbo-0125", temperature=0)

|

||||

structured_llm_router = llm.with_structured_output(RouteQuery)

|

||||

```

|

||||

|

||||

For defining and querying retriever

|

||||

The following example defines and queries a retriever:

|

||||

|

||||

```python

|

||||

# add documents in LanceDB

|

||||

|

||||

@@ -11,7 +11,7 @@ FLARE, stands for Forward-Looking Active REtrieval augmented generation is a gen

|

||||

|

||||

[](https://colab.research.google.com/github/lancedb/vectordb-recipes/blob/main/examples/better-rag-FLAIR/main.ipynb)

|

||||

|

||||

Here’s a code snippet for using FLARE with Langchain

|

||||

Here’s a code snippet for using FLARE with Langchain:

|

||||

|

||||

```python

|

||||

from langchain.vectorstores import LanceDB

|

||||

|

||||

@@ -11,7 +11,7 @@ HyDE, stands for Hypothetical Document Embeddings is an approach used for precis

|

||||

|

||||

[](https://colab.research.google.com/github/lancedb/vectordb-recipes/blob/main/examples/Advance-RAG-with-HyDE/main.ipynb)

|

||||

|

||||

Here’s a code snippet for using HyDE with Langchain

|

||||

Here’s a code snippet for using HyDE with Langchain:

|

||||

|

||||

```python

|

||||

from langchain.llms import OpenAI

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

**Agentic RAG 🤖**

|

||||

====================================================================

|

||||

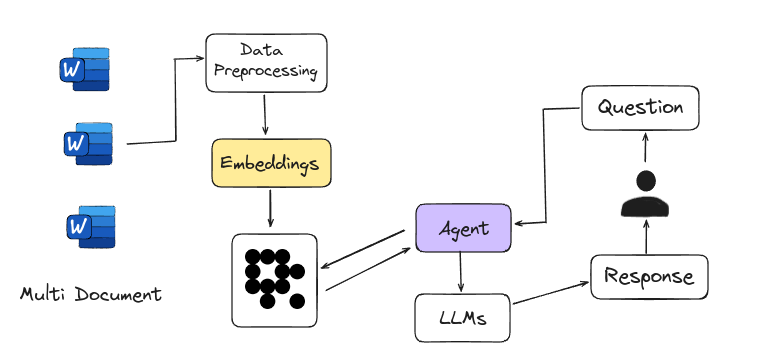

Agentic RAG is Agent-based RAG introduces an advanced framework for answering questions by using intelligent agents instead of just relying on large language models. These agents act like expert researchers, handling complex tasks such as detailed planning, multi-step reasoning, and using external tools. They navigate multiple documents, compare information, and generate accurate answers. This system is easily scalable, with each new document set managed by a sub-agent, making it a powerful tool for tackling a wide range of information needs.

|

||||

Agentic RAG introduces an advanced framework for answering questions by using intelligent agents instead of just relying on large language models. These agents act like expert researchers, handling complex tasks such as detailed planning, multi-step reasoning, and using external tools. They navigate multiple documents, compare information, and generate accurate answers. This system is easily scalable, with each new document set managed by a sub-agent, making it a powerful tool for tackling a wide range of information needs.

|

||||

|

||||

<figure markdown="span">

|

||||

|

||||

@@ -9,7 +9,7 @@ Agentic RAG is Agent-based RAG introduces an advanced framework for answering qu

|

||||

|

||||

[](https://colab.research.google.com/github/lancedb/vectordb-recipes/blob/main/tutorials/Agentic_RAG/main.ipynb)

|

||||

|

||||

Here’s a code snippet for defining retriever using Langchain

|

||||

Here’s a code snippet for defining retriever using Langchain:

|

||||

|

||||

```python

|

||||

from langchain.text_splitter import RecursiveCharacterTextSplitter

|

||||

@@ -41,7 +41,7 @@ retriever = vectorstore.as_retriever()

|

||||

|

||||

```

|

||||

|

||||

Agent that formulates an improved query for better retrieval results and then grades the retrieved documents

|

||||

Here is an agent that formulates an improved query for better retrieval results and then grades the retrieved documents:

|

||||

|

||||

```python

|

||||

def grade_documents(state) -> Literal["generate", "rewrite"]:

|

||||

|

||||

@@ -4,7 +4,7 @@

|

||||

Corrective-RAG (CRAG) is a strategy for Retrieval-Augmented Generation (RAG) that includes self-reflection and self-grading of retrieved documents. Here’s a simplified breakdown of the steps involved:

|

||||

|

||||

1. **Relevance Check**: If at least one document meets the relevance threshold, the process moves forward to the generation phase.

|

||||

2. **Knowledge Refinement**: Before generating an answer, the process refines the knowledge by dividing the document into smaller segments called "knowledge strips."

|

||||

2. **Knowledge Refinement**: Before generating an answer, the process refines the knowledge by dividing the document into smaller segments called "knowledge strips".

|

||||

3. **Grading and Filtering**: Each "knowledge strip" is graded, and irrelevant ones are filtered out.

|

||||

4. **Additional Data Source**: If all documents are below the relevance threshold, or if the system is unsure about their relevance, it will seek additional information by performing a web search to supplement the retrieved data.

|

||||

|

||||

@@ -19,11 +19,11 @@ Above steps are mentioned in

|

||||

|

||||

Corrective Retrieval-Augmented Generation (CRAG) is a method that works like a **built-in fact-checker**.

|

||||

|

||||

**[Offical Implementation](https://github.com/HuskyInSalt/CRAG)**

|

||||

**[Official Implementation](https://github.com/HuskyInSalt/CRAG)**

|

||||

|

||||

[](https://colab.research.google.com/github/lancedb/vectordb-recipes/blob/main/tutorials/Corrective-RAG-with_Langgraph/CRAG_with_Langgraph.ipynb)

|

||||

|

||||

Here’s a code snippet for defining a table with the [Embedding API](https://lancedb.github.io/lancedb/embeddings/embedding_functions/), and retrieves the relevant documents.

|

||||

Here’s a code snippet that defines a table with the [Embedding API](https://lancedb.github.io/lancedb/embeddings/embedding_functions/) and retrieves the relevant documents:

|

||||

|

||||

```python

|

||||

import pandas as pd

|

||||

@@ -115,6 +115,6 @@ def grade_documents(state):

|

||||

}

|

||||

```

|

||||

|

||||

Check Colab for the Implementation of CRAG with Langgraph

|

||||

Check Colab for the Implementation of CRAG with Langgraph:

|

||||

|

||||

[](https://colab.research.google.com/github/lancedb/vectordb-recipes/blob/main/tutorials/Corrective-RAG-with_Langgraph/CRAG_with_Langgraph.ipynb)

|

||||

@@ -6,7 +6,7 @@ One of the main benefits of Graph RAG is its ability to capture and represent co

|

||||

|

||||

**[Official Paper](https://arxiv.org/pdf/2404.16130)**

|

||||

|

||||

**[Offical Implementation](https://github.com/microsoft/graphrag)**

|

||||

**[Official Implementation](https://github.com/microsoft/graphrag)**

|

||||

|

||||

[Microsoft Research Blog](https://www.microsoft.com/en-us/research/blog/graphrag-unlocking-llm-discovery-on-narrative-private-data/)

|

||||

|

||||

@@ -39,13 +39,13 @@ python3 -m graphrag.index --root dataset-dir

|

||||

|

||||

- **Execute Query**

|

||||

|

||||

Global Query Execution gives a broad overview of dataset

|

||||

Global Query Execution gives a broad overview of the dataset:

|

||||

|

||||

```bash

|

||||

python3 -m graphrag.query --root dataset-dir --method global "query-question"

|

||||

```

|

||||

|

||||

Local Query Execution gives a detailed and specific answers based on the context of the entities

|

||||

Local Query Execution gives detailed and specific answers based on the context of the entities:

|

||||

|

||||

```bash

|

||||

python3 -m graphrag.query --root dataset-dir --method local "query-question"

|

||||

|

||||

@@ -15,7 +15,7 @@ MRAG is cost-effective and energy-efficient because it avoids extra LLM queries,

|

||||

|

||||

**[Official Implementation](https://github.com/spcl/MRAG)**

|

||||

|

||||

Here’s a code snippet for defining different embedding spaces with the [Embedding API](https://lancedb.github.io/lancedb/embeddings/embedding_functions/)

|

||||

Here’s a code snippet for defining different embedding spaces with the [Embedding API](https://lancedb.github.io/lancedb/embeddings/embedding_functions/):

|

||||

|

||||

```python

|

||||

import lancedb

|

||||

@@ -44,6 +44,6 @@ class Space3(LanceModel):

|

||||

vector: Vector(model3.ndims()) = model3.VectorField()

|

||||

```

|

||||

|

||||

Create different tables using defined embedding spaces, then make queries to each embedding space. Use the resulted closest documents from each embedding space to generate answers.

|

||||

Create different tables using defined embedding spaces, then make queries to each embedding space. Use the resulting closest documents from each embedding space to generate answers.

|

||||

|

||||

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

**Self RAG 🤳**

|

||||

====================================================================

|

||||

Self-RAG is a strategy for Retrieval-Augmented Generation (RAG) to get better retrieved information, generated text, and checking their own work, all without losing their flexibility. Unlike the traditional Retrieval-Augmented Generation (RAG) method, Self-RAG retrieves information as needed, can skip retrieval if not needed, and evaluates its own output while generating text. It also uses a process to pick the best output based on different preferences.

|

||||

Self-RAG is a strategy for Retrieval-Augmented Generation (RAG) to get better retrieved information, generated text, and validation, without loss of flexibility. Unlike the traditional Retrieval-Augmented Generation (RAG) method, Self-RAG retrieves information as needed, can skip retrieval if not needed, and evaluates its own output while generating text. It also uses a process to pick the best output based on different preferences.

|

||||

|

||||

**[Official Paper](https://arxiv.org/pdf/2310.11511)**

|

||||

|

||||

@@ -10,11 +10,11 @@ Self-RAG is a strategy for Retrieval-Augmented Generation (RAG) to get better re

|

||||

</figcaption>

|

||||

</figure>

|

||||

|

||||

**[Offical Implementation](https://github.com/AkariAsai/self-rag)**

|

||||

**[Official Implementation](https://github.com/AkariAsai/self-rag)**

|

||||

|

||||

Self-RAG starts by generating a response without retrieving extra info if it's not needed. For questions that need more details, it retrieves to get the necessary information.

|

||||

|

||||

Here’s a code snippet for defining retriever using Langchain

|

||||

Here’s a code snippet for defining retriever using Langchain:

|

||||

|

||||

```python

|

||||

from langchain.text_splitter import RecursiveCharacterTextSplitter

|

||||

@@ -46,7 +46,7 @@ retriever = vectorstore.as_retriever()

|

||||

|

||||

```

|

||||

|

||||

Functions that grades the retrieved documents and if required formulates an improved query for better retrieval results

|

||||

The following functions grade the retrieved documents and formulate an improved query for better retrieval results, if required:

|

||||

|

||||

```python

|

||||

def grade_documents(state) -> Literal["generate", "rewrite"]:

|

||||

|

||||

@@ -1,8 +1,8 @@

|

||||

**SFR RAG 📑**

|

||||

====================================================================

|

||||

Salesforce AI Research introduces SFR-RAG, a 9-billion-parameter language model trained with a significant emphasis on reliable, precise, and faithful contextual generation abilities specific to real-world RAG use cases and relevant agentic tasks. They include precise factual knowledge extraction, distinguishing relevant against distracting contexts, citing appropriate sources along with answers, producing complex and multi-hop reasoning over multiple contexts, consistent format following, as well as refraining from hallucination over unanswerable queries.

|

||||

Salesforce AI Research introduced SFR-RAG, a 9-billion-parameter language model trained with a significant emphasis on reliable, precise, and faithful contextual generation abilities specific to real-world RAG use cases and relevant agentic tasks. It targets precise factual knowledge extraction, distinction between relevant and distracting contexts, citation of appropriate sources along with answers, production of complex and multi-hop reasoning over multiple contexts, consistent format following, as well as minimization of hallucination over unanswerable queries.

|

||||

|

||||

**[Offical Implementation](https://github.com/SalesforceAIResearch/SFR-RAG)**

|

||||

**[Official Implementation](https://github.com/SalesforceAIResearch/SFR-RAG)**

|

||||

|

||||

<figure markdown="span">

|

||||

|

||||

|

||||

@@ -1,7 +1,6 @@

|

||||

# AnswersDotAI Rerankers

|

||||

|

||||

This integration allows using answersdotai's rerankers to rerank the search results. [Rerankers](https://github.com/AnswerDotAI/rerankers)

|

||||

A lightweight, low-dependency, unified API to use all common reranking and cross-encoder models.

|

||||

This integration uses [AnswersDotAI's rerankers](https://github.com/AnswerDotAI/rerankers) to rerank the search results, providing a lightweight, low-dependency, unified API to use all common reranking and cross-encoder models.

|

||||

|

||||

!!! note

|

||||

Supported Query Types: Hybrid, Vector, FTS

|

||||

@@ -45,10 +44,10 @@ Accepted Arguments

|

||||

----------------

|

||||

| Argument | Type | Default | Description |

|

||||

| --- | --- | --- | --- |

|

||||

| `model_type` | `str` | `"colbert"` | The type of model to use. Supported model types can be found here - https://github.com/AnswerDotAI/rerankers |

|

||||

| `model_type` | `str` | `"colbert"` | The type of model to use. Supported model types can be found here: https://github.com/AnswerDotAI/rerankers. |

|

||||

| `model_name` | `str` | `"answerdotai/answerai-colbert-small-v1"` | The name of the reranker model to use. |

|

||||

| `column` | `str` | `"text"` | The name of the column to use as input to the cross encoder model. |

|

||||

| `return_score` | str | `"relevance"` | Options are "relevance" or "all". The type of score to return. If "relevance", will return only the `_relevance_score. If "all" is supported, will return relevance score along with the vector and/or fts scores depending on query type |

|

||||

| `return_score` | str | `"relevance"` | Options are "relevance" or "all". The type of score to return. If "relevance", will return only the `_relevance_score`. If "all" is supported, will return relevance score along with the vector and/or FTS scores depending on query type. |

|

||||

|

||||

|

||||

|

||||

@@ -58,17 +57,17 @@ You can specify the type of scores you want the reranker to return. The followin

|

||||

### Hybrid Search

|

||||

|`return_score`| Status | Description |

|

||||

| --- | --- | --- |

|

||||

| `relevance` | ✅ Supported | Returns only have the `_relevance_score` column |

|

||||

| `all` | ❌ Not Supported | Returns have vector(`_distance`) and FTS(`score`) along with Hybrid Search score(`_relevance_score`) |

|

||||

| `relevance` | ✅ Supported | Results only have the `_relevance_score` column. |

|

||||

| `all` | ❌ Not Supported | Results have vector(`_distance`) and FTS(`score`) along with Hybrid Search score(`_relevance_score`). |

|

||||

|

||||

### Vector Search

|

||||

|`return_score`| Status | Description |

|

||||

| --- | --- | --- |

|

||||

| `relevance` | ✅ Supported | Returns only have the `_relevance_score` column |

|

||||

| `all` | ✅ Supported | Returns have vector(`_distance`) along with Hybrid Search score(`_relevance_score`) |

|

||||

| `relevance` | ✅ Supported | Results only have the `_relevance_score` column. |

|

||||

| `all` | ✅ Supported | Results have vector(`_distance`) along with Hybrid Search score(`_relevance_score`). |

|

||||

|

||||

### FTS Search

|

||||

|`return_score`| Status | Description |

|

||||

| --- | --- | --- |

|

||||

| `relevance` | ✅ Supported | Returns only have the `_relevance_score` column |

|

||||

| `all` | ✅ Supported | Returns have FTS(`score`) along with Hybrid Search score(`_relevance_score`) |

|

||||

| `relevance` | ✅ Supported | Results only have the `_relevance_score` column. |

|

||||

| `all` | ✅ Supported | Results have FTS(`score`) along with Hybrid Search score(`_relevance_score`). |

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

# Cohere Reranker

|

||||

|

||||

This re-ranker uses the [Cohere](https://cohere.ai/) API to rerank the search results. You can use this re-ranker by passing `CohereReranker()` to the `rerank()` method. Note that you'll either need to set the `COHERE_API_KEY` environment variable or pass the `api_key` argument to use this re-ranker.

|

||||

This reranker uses the [Cohere](https://cohere.ai/) API to rerank the search results. You can use this reranker by passing `CohereReranker()` to the `rerank()` method. Note that you'll either need to set the `COHERE_API_KEY` environment variable or pass the `api_key` argument to use this reranker.

|

||||

|

||||

|

||||

!!! note

|

||||

@@ -62,17 +62,17 @@ You can specify the type of scores you want the reranker to return. The followin

|

||||

### Hybrid Search

|

||||

|`return_score`| Status | Description |

|

||||

| --- | --- | --- |

|

||||

| `relevance` | ✅ Supported | Returns only have the `_relevance_score` column |

|

||||

| `all` | ❌ Not Supported | Returns have vector(`_distance`) and FTS(`score`) along with Hybrid Search score(`_relevance_score`) |

|

||||

| `relevance` | ✅ Supported | Results only have the `_relevance_score` column |

|

||||

| `all` | ❌ Not Supported | Results have vector(`_distance`) and FTS(`score`) along with Hybrid Search score(`_relevance_score`) |

|

||||

|

||||

### Vector Search

|

||||

|`return_score`| Status | Description |

|

||||

| --- | --- | --- |

|

||||

| `relevance` | ✅ Supported | Returns only have the `_relevance_score` column |

|

||||

| `all` | ✅ Supported | Returns have vector(`_distance`) along with Hybrid Search score(`_relevance_score`) |

|

||||

| `relevance` | ✅ Supported | Results only have the `_relevance_score` column |

|

||||

| `all` | ✅ Supported | Results have vector(`_distance`) along with Hybrid Search score(`_relevance_score`) |

|

||||

|

||||

### FTS Search

|

||||

|`return_score`| Status | Description |

|

||||

| --- | --- | --- |

|

||||

| `relevance` | ✅ Supported | Returns only have the `_relevance_score` column |

|

||||

| `all` | ✅ Supported | Returns have FTS(`score`) along with Hybrid Search score(`_relevance_score`) |

|

||||

| `relevance` | ✅ Supported | Results only have the `_relevance_score` column |

|

||||

| `all` | ✅ Supported | Results have FTS(`score`) along with Hybrid Search score(`_relevance_score`) |

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

# ColBERT Reranker

|

||||

|

||||

This re-ranker uses ColBERT model to rerank the search results. You can use this re-ranker by passing `ColbertReranker()` to the `rerank()` method.

|

||||

This reranker uses the ColBERT model to rerank the search results. You can use this reranker by passing `ColbertReranker()` to the `rerank()` method.

|

||||

!!! note

|

||||

Supported Query Types: Hybrid, Vector, FTS

|

||||

|

||||

@@ -46,7 +46,7 @@ Accepted Arguments

|

||||

| `model_name` | `str` | `"colbert-ir/colbertv2.0"` | The name of the reranker model to use.|

|

||||

| `column` | `str` | `"text"` | The name of the column to use as input to the cross encoder model. |

|

||||

| `device` | `str` | `None` | The device to use for the cross encoder model. If None, will use "cuda" if available, otherwise "cpu". |

|

||||

| `return_score` | str | `"relevance"` | Options are "relevance" or "all". The type of score to return. If "relevance", will return only the `_relevance_score. If "all" is supported, will return relevance score along with the vector and/or fts scores depending on query type |

|

||||

| `return_score` | str | `"relevance"` | Options are "relevance" or "all". The type of score to return. If "relevance", will return only the `_relevance_score`. If "all" is supported, will return relevance score along with the vector and/or FTS scores depending on query type. |

|

||||

|

||||

|

||||

## Supported Scores for each query type

|

||||

@@ -55,17 +55,17 @@ You can specify the type of scores you want the reranker to return. The followin

|

||||

### Hybrid Search

|

||||

|`return_score`| Status | Description |

|

||||

| --- | --- | --- |

|

||||

| `relevance` | ✅ Supported | Returns only have the `_relevance_score` column |

|

||||

| `all` | ❌ Not Supported | Returns have vector(`_distance`) and FTS(`score`) along with Hybrid Search score(`_relevance_score`) |

|

||||

| `relevance` | ✅ Supported | Results only have the `_relevance_score` column. |

|

||||

| `all` | ❌ Not Supported | Results have vector(`_distance`) and FTS(`score`) along with Hybrid Search score(`_relevance_score`). |

|

||||

|

||||

### Vector Search

|

||||

|`return_score`| Status | Description |

|

||||

| --- | --- | --- |

|

||||

| `relevance` | ✅ Supported | Returns only have the `_relevance_score` column |

|

||||

| `all` | ✅ Supported | Returns have vector(`_distance`) along with Hybrid Search score(`_relevance_score`) |

|

||||

| `relevance` | ✅ Supported | Results only have the `_relevance_score` column. |

|

||||

| `all` | ✅ Supported | Results have vector(`_distance`) along with Hybrid Search score(`_relevance_score`). |

|

||||

|

||||

### FTS Search

|

||||

|`return_score`| Status | Description |

|

||||

| --- | --- | --- |

|

||||

| `relevance` | ✅ Supported | Returns only have the `_relevance_score` column |

|

||||

| `all` | ✅ Supported | Returns have FTS(`score`) along with Hybrid Search score(`_relevance_score`) |

|

||||

| `relevance` | ✅ Supported | Results only have the `_relevance_score` column. |

|

||||

| `all` | ✅ Supported | Results have FTS(`score`) along with Hybrid Search score(`_relevance_score`). |

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

# Cross Encoder Reranker

|

||||

|

||||

This re-ranker uses Cross Encoder models from sentence-transformers to rerank the search results. You can use this re-ranker by passing `CrossEncoderReranker()` to the `rerank()` method.

|

||||

This reranker uses Cross Encoder models from sentence-transformers to rerank the search results. You can use this reranker by passing `CrossEncoderReranker()` to the `rerank()` method.

|

||||

!!! note

|

||||

Supported Query Types: Hybrid, Vector, FTS

|

||||

|

||||

@@ -46,7 +46,7 @@ Accepted Arguments

|

||||

| `model_name` | `str` | `"cross-encoder/ms-marco-TinyBERT-L-6"` | The name of the reranker model to use.|

|

||||

| `column` | `str` | `"text"` | The name of the column to use as input to the cross encoder model. |

|

||||

| `device` | `str` | `None` | The device to use for the cross encoder model. If None, will use "cuda" if available, otherwise "cpu". |

|

||||

| `return_score` | str | `"relevance"` | Options are "relevance" or "all". The type of score to return. If "relevance", will return only the `_relevance_score. If "all" is supported, will return relevance score along with the vector and/or fts scores depending on query type |

|

||||

| `return_score` | str | `"relevance"` | Options are "relevance" or "all". The type of score to return. If "relevance", will return only the `_relevance_score`. If "all" is supported, will return relevance score along with the vector and/or FTS scores depending on query type. |

|

||||

|

||||

## Supported Scores for each query type

|

||||

You can specify the type of scores you want the reranker to return. The following are the supported scores for each query type:

|

||||

@@ -54,17 +54,17 @@ You can specify the type of scores you want the reranker to return. The followin

|

||||

### Hybrid Search

|

||||

|`return_score`| Status | Description |

|

||||

| --- | --- | --- |

|

||||

| `relevance` | ✅ Supported | Returns only have the `_relevance_score` column |

|

||||

| `all` | ❌ Not Supported | Returns have vector(`_distance`) and FTS(`score`) along with Hybrid Search score(`_relevance_score`) |

|

||||

| `relevance` | ✅ Supported | Results only have the `_relevance_score` column. |

|

||||

| `all` | ❌ Not Supported | Results have vector(`_distance`) and FTS(`score`) along with Hybrid Search score(`_relevance_score`). |

|

||||

|

||||

### Vector Search

|

||||

|`return_score`| Status | Description |

|

||||

| --- | --- | --- |

|

||||

| `relevance` | ✅ Supported | Returns only have the `_relevance_score` column |

|

||||

| `all` | ✅ Supported | Returns have vector(`_distance`) along with Hybrid Search score(`_relevance_score`) |

|

||||

| `relevance` | ✅ Supported | Results only have the `_relevance_score` column. |

|

||||

| `all` | ✅ Supported | Results have vector(`_distance`) along with Hybrid Search score(`_relevance_score`). |

|

||||

|

||||

### FTS Search

|

||||

|`return_score`| Status | Description |

|

||||

| --- | --- | --- |

|

||||

| `relevance` | ✅ Supported | Returns only have the `_relevance_score` column |

|

||||

| `all` | ✅ Supported | Returns have FTS(`score`) along with Hybrid Search score(`_relevance_score`) |

|

||||

| `relevance` | ✅ Supported | Results only have the `_relevance_score` column. |

|

||||

| `all` | ✅ Supported | Results have FTS(`score`) along with Hybrid Search score(`_relevance_score`). |

|

||||

|

||||

@@ -1,9 +1,10 @@

|

||||

## Building Custom Rerankers

|

||||

You can build your own custom reranker by subclassing the `Reranker` class and implementing the `rerank_hybrid()` method. Optionally, you can also implement the `rerank_vector()` and `rerank_fts()` methods if you want to support reranking for vector and FTS search separately.

|

||||

Here's an example of a custom reranker that combines the results of semantic and full-text search using a linear combination of the scores.

|

||||

|

||||

The `Reranker` base interface comes with a `merge_results()` method that can be used to combine the results of semantic and full-text search. This is a vanilla merging algorithm that simply concatenates the results and removes the duplicates without taking the scores into consideration. It only keeps the first copy of the row encountered. This works well in cases that don't require the scores of semantic and full-text search to combine the results. If you want to use the scores or want to support `return_score="all"`, you'll need to implement your own merging algorithm.

|

||||

|

||||

Here's an example of a custom reranker that combines the results of semantic and full-text search using a linear combination of the scores:

|

||||

|

||||

```python

|

||||

|

||||

from lancedb.rerankers import Reranker

|

||||

@@ -42,7 +43,7 @@ class MyReranker(Reranker):

|

||||

```

|

||||

|

||||

### Example of a Custom Reranker

|

||||

For the sake of simplicity let's build custom reranker that just enchances the Cohere Reranker by accepting a filter query, and accept other CohereReranker params as kwags.

|

||||

For the sake of simplicity, let's build a custom reranker that enhances the Cohere Reranker by accepting a filter query, and accepts other CohereReranker params as kwargs.

|

||||

|

||||

```python

|

||||

|

||||

@@ -83,6 +84,6 @@ class ModifiedCohereReranker(CohereReranker):

|

||||

```

|

||||

|

||||

!!! tip

|

||||

The `vector_results` and `fts_results` are pyarrow tables. Lean more about pyarrow tables [here](https://arrow.apache.org/docs/python). It can be convered to other data types like pandas dataframe, pydict, pylist etc.

|

||||

The `vector_results` and `fts_results` are pyarrow tables. Learn more about pyarrow tables [here](https://arrow.apache.org/docs/python). They can be converted to other data types like pandas dataframe, pydict, pylist etc.

|

||||

|

||||

For example, you can convert them to pandas dataframes using the `to_pandas()` method and perform any operations you want. After you are done, you can convert the dataframe back to a pyarrow table using the `pa.Table.from_pandas()` method and return it.

|

||||

@@ -13,7 +13,7 @@ LanceDB comes with some built-in rerankers. Some of the rerankers that are avail

|

||||

|

||||

|

||||

## Using a Reranker

|

||||

Using rerankers is optional for vector and FTS. However, for hybrid search, rerankers are required. To use a reranker, you need to create an instance of the reranker and pass it to the `rerank` method of the query builder.

|

||||

Using rerankers is optional for vector and FTS. However, for hybrid search, rerankers are required. To use a reranker, you need to create an instance of the reranker and pass it to the `rerank` method of the query builder:

|

||||

|

||||

```python

|

||||

import lancedb

|

||||

@@ -64,7 +64,7 @@ reranked = reranker.rerank_multivector([res1, res2, res3], deduplicate=True)

|

||||

```

|

||||

|

||||

## Available Rerankers

|

||||

LanceDB comes with some built-in rerankers. Here are some of the rerankers that are available in LanceDB:

|

||||

LanceDB comes with the following built-in rerankers:

|

||||

|

||||

- [Cohere Reranker](./cohere.md)

|

||||

- [Cross Encoder Reranker](./cross_encoder.md)

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

# Jina Reranker

|

||||

|

||||

This re-ranker uses the [Jina](https://jina.ai/reranker/) API to rerank the search results. You can use this re-ranker by passing `JinaReranker()` to the `rerank()` method. Note that you'll either need to set the `JINA_API_KEY` environment variable or pass the `api_key` argument to use this re-ranker.

|

||||

This reranker uses the [Jina](https://jina.ai/reranker/) API to rerank the search results. You can use this reranker by passing `JinaReranker()` to the `rerank()` method. Note that you'll either need to set the `JINA_API_KEY` environment variable or pass the `api_key` argument to use this reranker.

|

||||

|

||||

|

||||

!!! note

|

||||

@@ -48,11 +48,11 @@ Accepted Arguments

|

||||

----------------

|

||||

| Argument | Type | Default | Description |

|

||||

| --- | --- | --- | --- |

|

||||

| `model_name` | `str` | `"jina-reranker-v2-base-multilingual"` | The name of the reranker model to use. You can find the list of available models in https://jina.ai/reranker/|

|

||||

| `model_name` | `str` | `"jina-reranker-v2-base-multilingual"` | The name of the reranker model to use. You can find the list of available models in https://jina.ai/reranker. |

|

||||

| `column` | `str` | `"text"` | The name of the column to use as input to the cross encoder model. |

|

||||

| `top_n` | `str` | `None` | The number of results to return. If None, will return all results. |

|

||||

| `api_key` | `str` | `None` | The API key for the Jina API. If not provided, the `JINA_API_KEY` environment variable is used. |

|

||||

| `return_score` | str | `"relevance"` | Options are "relevance" or "all". The type of score to return. If "relevance", will return only the `_relevance_score. If "all" is supported, will return relevance score along with the vector and/or fts scores depending on query type |

|

||||

| `return_score` | str | `"relevance"` | Options are "relevance" or "all". The type of score to return. If "relevance", will return only the `_relevance_score`. If "all" is supported, will return relevance score along with the vector and/or FTS scores depending on query type. |

|

||||

|

||||

|

||||

|

||||

@@ -62,17 +62,17 @@ You can specify the type of scores you want the reranker to return. The followin

|

||||

### Hybrid Search

|

||||

|`return_score`| Status | Description |

|

||||

| --- | --- | --- |

|

||||

| `relevance` | ✅ Supported | Returns only have the `_relevance_score` column |

|

||||

| `all` | ❌ Not Supported | Returns have vector(`_distance`) and FTS(`score`) along with Hybrid Search score(`_relevance_score`) |

|

||||

| `relevance` | ✅ Supported | Results only have the `_relevance_score` column. |

|

||||

| `all` | ❌ Not Supported | Results have vector(`_distance`) and FTS(`score`) along with Hybrid Search score(`_relevance_score`). |

|

||||

|

||||

### Vector Search

|

||||

|`return_score`| Status | Description |

|

||||

| --- | --- | --- |

|

||||

| `relevance` | ✅ Supported | Returns only have the `_relevance_score` column |

|

||||

| `all` | ✅ Supported | Returns have vector(`_distance`) along with Hybrid Search score(`_relevance_score`) |

|

||||

| `relevance` | ✅ Supported | Results only have the `_relevance_score` column. |

|

||||

| `all` | ✅ Supported | Results have vector(`_distance`) along with Hybrid Search score(`_relevance_score`). |

|

||||

|

||||

### FTS Search

|

||||

|`return_score`| Status | Description |

|

||||

| --- | --- | --- |

|

||||

| `relevance` | ✅ Supported | Returns only have the `_relevance_score` column |

|

||||

| `all` | ✅ Supported | Returns have FTS(`score`) along with Hybrid Search score(`_relevance_score`) |

|

||||

| `relevance` | ✅ Supported | Results only have the `_relevance_score` column. |

|

||||

| `all` | ✅ Supported | Results have FTS(`score`) along with Hybrid Search score(`_relevance_score`). |

|

||||

|

||||

@@ -1,9 +1,9 @@

|

||||

# Linear Combination Reranker

|

||||

|

||||

!!! note

|

||||

This is depricated. It is recommended to use the `RRFReranker` instead, if you want to use a score based reranker.

|

||||

This is deprecated. It is recommended to use the `RRFReranker` instead, if you want to use a score-based reranker.

|

||||

|

||||

It combines the results of semantic and full-text search using a linear combination of the scores. The weights for the linear combination can be specified. It defaults to 0.7, i.e, 70% weight for semantic search and 30% weight for full-text search.

|

||||

The Linear Combination Reranker combines the results of semantic and full-text search using a linear combination of the scores. The weight for the linear combination can be specified and defaults to 0.7, i.e., 70% weight for semantic search and 30% weight for full-text search.
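As a rough sketch of the weighting described above (not LanceDB's actual implementation), the function below combines two score dictionaries keyed by row id. It assumes both scores are already normalized to the range 0 to 1 with higher meaning more relevant, and that a row missing from one result set contributes 0 for that side; the function name and inputs are hypothetical.

```python
def linear_combination(vector_scores, fts_scores, weight=0.7):
    """Rank row ids by weight * vector score + (1 - weight) * FTS score."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be between 0 and 1")
    combined = {}
    for row_id in set(vector_scores) | set(fts_scores):
        vec = vector_scores.get(row_id, 0.0)  # assumption: missing score counts as 0
        fts = fts_scores.get(row_id, 0.0)
        combined[row_id] = weight * vec + (1.0 - weight) * fts
    # Highest combined score first; ties are broken by row id for determinism.
    return sorted(combined.items(), key=lambda kv: (-kv[1], kv[0]))


ranked = linear_combination(
    vector_scores={"a": 0.9, "b": 0.4},
    fts_scores={"b": 1.0, "c": 0.5},
)
print([row_id for row_id, _ in ranked])  # ['a', 'b', 'c']
```

With the default weight, row `a` ranks first on its semantic score alone, while `b` only overtakes it if the weight is lowered enough to favor full-text matches.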

|

||||

|

||||

!!! note

|

||||

Supported Query Types: Hybrid

|

||||

@@ -51,5 +51,5 @@ You can specify the type of scores you want the reranker to return. The followin

|

||||

### Hybrid Search

|

||||