The long-term plan is to make LayerMap an immutable, multi-versioned data structure. That is, we will not modify LayerMap in place; instead, we will create a (cheap) copy and modify that copy. Once we're done making modifications, we make the copy available to readers through the SeqWait.

The modifications will be made by a _single_ task, the pageserver actor. But _many_ readers can `wait_for` and use the same or different versions of the LayerMap.

So, there's a new method `split_spmc` that splits up a `SeqWait` into a non-clonable producer (`Advance`) and a clonable consumer (`Wait`). (`SeqWait` itself is mpmc, but for the actor architecture it makes sense to enforce spmc in the type system.)
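A minimal synchronous sketch of the producer/consumer split follows. It assumes a toy sequence built on `Mutex` + `Condvar` over a `u64`. The real `SeqWait` is async and its API may differ, and `Advance::advance` and `Wait::wait_for` here are illustrative. The point is that `Advance` does not implement `Clone`, so the type system permits only one producer, while `Wait` is `Clone` and can be handed out to many readers.

```rust
use std::sync::{Arc, Condvar, Mutex};

// Shared state: the latest published sequence number plus a condvar for waiters.
struct Inner {
    current: Mutex<u64>,
    cond: Condvar,
}

/// Single producer: deliberately NOT `Clone`, so only one owner can advance.
pub struct Advance {
    inner: Arc<Inner>,
}

/// Consumer handle: `Clone`, so any number of readers can wait.
#[derive(Clone)]
pub struct Wait {
    inner: Arc<Inner>,
}

/// Toy equivalent of `split_spmc`: create the producer/consumer pair.
pub fn split_spmc(start: u64) -> (Advance, Wait) {
    let inner = Arc::new(Inner { current: Mutex::new(start), cond: Condvar::new() });
    (Advance { inner: Arc::clone(&inner) }, Wait { inner })
}

impl Advance {
    /// Publish a new sequence number and wake all waiters.
    pub fn advance(&mut self, num: u64) {
        let mut cur = self.inner.current.lock().unwrap();
        assert!(num >= *cur, "sequence numbers must not go backwards");
        *cur = num;
        self.inner.cond.notify_all();
    }
}

impl Wait {
    /// Block until the published number is at least `num`, then return it.
    pub fn wait_for(&self, num: u64) -> u64 {
        let cur = self.inner.current.lock().unwrap();
        let cur = self.inner.cond.wait_while(cur, |c| *c < num).unwrap();
        *cur
    }
}
```

Trying to `.clone()` an `Advance` is a compile error, which is exactly the spmc guarantee the split is meant to encode.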


With this commit, one can request compute reconfiguration from the running `compute_ctl` with compute in `Running` state by sending a new spec:

```shell
curl -d "{\"spec\": $(cat ./compute-spec-new.json)}" http://localhost:3080/configure
```

Internally, we start a separate configurator thread that waits in a loop on a `Condvar` for the `ConfigurationPending` compute state. It then performs the reconfiguration, sets the compute back to the `Running` state and notifies other waiters.
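The configurator loop and the `/configure` side can be sketched with `std::sync::Condvar`. This is a simplified model, not `compute_ctl`'s actual code: `ComputeStatus`, `State`, `Shared`, `apply_spec` and `request_configuration` are hypothetical names, the spec is a plain `String`, and the loop runs a fixed number of rounds so the sketch terminates.

```rust
use std::sync::{Arc, Condvar, Mutex};

/// Hypothetical subset of compute states.
#[derive(Clone, Copy, Debug, PartialEq)]
pub enum ComputeStatus {
    Running,
    ConfigurationPending,
    Configuration,
}

pub struct State {
    pub status: ComputeStatus,
    pub spec: String,
    pub applied: Vec<String>, // specs applied so far, to make the sketch observable
}

pub struct Shared {
    pub state: Mutex<State>,
    pub cond: Condvar,
}

// Hypothetical stand-in for the real (slow) reconfiguration work.
fn apply_spec(spec: &str) -> String {
    spec.to_string()
}

/// Configurator thread body; a real one would loop for the process lifetime.
pub fn configurator_loop(shared: Arc<Shared>, rounds: usize) {
    for _ in 0..rounds {
        let guard = shared.state.lock().unwrap();
        let mut state = shared
            .cond
            .wait_while(guard, |s| s.status != ComputeStatus::ConfigurationPending)
            .unwrap();
        state.status = ComputeStatus::Configuration;
        let spec = state.spec.clone();
        drop(state); // don't hold the lock while reconfiguring

        let applied = apply_spec(&spec);

        let mut state = shared.state.lock().unwrap();
        state.applied.push(applied);
        state.status = ComputeStatus::Running;
        shared.cond.notify_all(); // wake the waiting /configure handler(s)
    }
}

/// What a `/configure` handler might do: store the spec, mark it pending,
/// wake the configurator and wait until the compute is `Running` again.
pub fn request_configuration(shared: &Shared, spec: &str) {
    let mut state = shared.state.lock().unwrap();
    state.spec = spec.to_string();
    state.status = ComputeStatus::ConfigurationPending;
    shared.cond.notify_all();
    let _state = shared
        .cond
        .wait_while(state, |s| s.status != ComputeStatus::Running)
        .unwrap();
}
```

A single condvar with `notify_all` serves both directions here, because each side re-checks its own predicate in `wait_while`.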

It will need some follow-ups, e.g. retry logic for control-plane requests, but it should be useful for testing in its current state. This shouldn't affect any existing environment, since computes are configured differently there.

Resolves neondatabase/cloud#4433

Reason and backtrace are added to the Broken state. The backtrace is collected automatically when the tenant enters the broken state. The format for the API, CLI and metrics is changed and unified to return the tenant state name in camel case. Previously, snake case was used for metrics and camel case for everything else. The tenant state field in the TenantInfo swagger spec now contains the state name in the "slug" field and any other fields (currently only reason and backtrace, for the Broken variant) in the "data" field. To allow for this breaking change, state was removed from the TenantInfo swagger spec, because it was not used anywhere.
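To illustrate the new shape, the sketch below renders a tenant state as `{"slug": ..., "data": ...}`. Only the `slug`/`data` layout and the Broken variant's `reason` and `backtrace` fields come from the description above. The other variants and the hand-written, dependency-free JSON rendering are assumptions. A real implementation would more likely derive this with serde's adjacently tagged enum representation, `#[serde(tag = "slug", content = "data")]`.

```rust
/// Simplified tenant state; the non-Broken variants are illustrative.
pub enum TenantState {
    Loading,
    Active,
    Broken { reason: String, backtrace: String },
}

impl TenantState {
    /// Camel-case state name, shared by the API, CLI and metrics.
    pub fn slug(&self) -> &'static str {
        match self {
            TenantState::Loading => "Loading",
            TenantState::Active => "Active",
            TenantState::Broken { .. } => "Broken",
        }
    }

    /// Render as {"slug": ..., "data": ...}; only Broken carries data.
    pub fn to_json(&self) -> String {
        match self {
            TenantState::Broken { reason, backtrace } => format!(
                r#"{{"slug":"{}","data":{{"reason":"{}","backtrace":"{}"}}}}"#,
                self.slug(),
                escape(reason),
                escape(backtrace)
            ),
            _ => format!(r#"{{"slug":"{}"}}"#, self.slug()),
        }
    }
}

// Minimal JSON string escaping for the sketch (backslashes, quotes, newlines).
fn escape(s: &str) -> String {
    s.replace('\\', "\\\\").replace('"', "\\\"").replace('\n', "\\n")
}
```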

Please note that the tenant's broken reason is not persisted on disk, so the reason is lost when the pageserver is restarted.

Requires changes to the grafana dashboard that monitors tenant states.

Closes #3001

---------

Co-authored-by: theirix <theirix@gmail.com>

All non-trivial updates were extracted into separate commits; `cargo hakari` data and its manifest format were also updated.

Three sets of crates remain not updated:

* `base64`: it is used in many places in proxy, and its API changed substantially between our version (0.13) and 0.21.

* `opentelemetry` and `opentelemetry-*` crates:

```
error[E0308]: mismatched types
  --> libs/tracing-utils/src/http.rs:65:21
   |
65 |     span.set_parent(parent_ctx);
   |          ---------- ^^^^^^^^^^ expected struct `opentelemetry_api::context::Context`, found struct `opentelemetry::Context`
   |          |
   |          arguments to this method are incorrect
   |
   = note: struct `opentelemetry::Context` and struct `opentelemetry_api::context::Context` have similar names, but are actually distinct types
note: struct `opentelemetry::Context` is defined in crate `opentelemetry_api`
  --> /Users/someonetoignore/.cargo/registry/src/github.com-1ecc6299db9ec823/opentelemetry_api-0.19.0/src/context.rs:77:1
   |
77 | pub struct Context {
   | ^^^^^^^^^^^^^^^^^^
note: struct `opentelemetry_api::context::Context` is defined in crate `opentelemetry_api`
  --> /Users/someonetoignore/.cargo/registry/src/github.com-1ecc6299db9ec823/opentelemetry_api-0.18.0/src/context.rs:77:1
   |
77 | pub struct Context {
   | ^^^^^^^^^^^^^^^^^^
   = note: perhaps two different versions of crate `opentelemetry_api` are being used?
note: associated function defined here
  --> /Users/someonetoignore/.cargo/registry/src/github.com-1ecc6299db9ec823/tracing-opentelemetry-0.18.0/src/span_ext.rs:43:8
   |
43 |     fn set_parent(&self, cx: Context);
   |        ^^^^^^^^^^

For more information about this error, try `rustc --explain E0308`.
error: could not compile `tracing-utils` due to previous error
warning: build failed, waiting for other jobs to finish...
error: could not compile `tracing-utils` due to previous error
```

`tracing-opentelemetry` 0.19, which is supposed to contain the update we need, is not released yet.

* Similarly, the `rustls`, `tokio-rustls`, `rustls-*` and `tls-listener` crates have the same issue:

```
error[E0308]: mismatched types
   --> libs/postgres_backend/tests/simple_select.rs:112:78
    |
112 |     let mut make_tls_connect = tokio_postgres_rustls::MakeRustlsConnect::new(client_cfg);
    |                                --------------------------------------------- ^^^^^^^^^^ expected struct `rustls::client::client_conn::ClientConfig`, found struct `ClientConfig`
    |                                |
    |                                arguments to this function are incorrect
    |
    = note: struct `ClientConfig` and struct `rustls::client::client_conn::ClientConfig` have similar names, but are actually distinct types
note: struct `ClientConfig` is defined in crate `rustls`
   --> /Users/someonetoignore/.cargo/registry/src/github.com-1ecc6299db9ec823/rustls-0.21.0/src/client/client_conn.rs:125:1
    |
125 | pub struct ClientConfig {
    | ^^^^^^^^^^^^^^^^^^^^^^^
note: struct `rustls::client::client_conn::ClientConfig` is defined in crate `rustls`
   --> /Users/someonetoignore/.cargo/registry/src/github.com-1ecc6299db9ec823/rustls-0.20.8/src/client/client_conn.rs:91:1
    |
91  | pub struct ClientConfig {
    | ^^^^^^^^^^^^^^^^^^^^^^^
    = note: perhaps two different versions of crate `rustls` are being used?
note: associated function defined here
   --> /Users/someonetoignore/.cargo/registry/src/github.com-1ecc6299db9ec823/tokio-postgres-rustls-0.9.0/src/lib.rs:23:12
    |
23  |     pub fn new(config: ClientConfig) -> Self {
    |            ^^^

For more information about this error, try `rustc --explain E0308`.
error: could not compile `postgres_backend` due to previous error
warning: build failed, waiting for other jobs to finish...
```

* aws crates: I could not make the new API work with the bucket endpoint override, and the console e2e tests failed. Our other tests passed; further investigation should be done in https://github.com/neondatabase/neon/issues/4008

Sometimes it contained real values, sometimes just defaults if the spec had not been received yet. Make the state clearer by making it an Option instead.

One consequence is that if some of the required settings, like neon.tenant_id, are missing from the spec file sent to the /configure endpoint, this is spotted earlier and you get an immediate HTTP error response. Not that it matters very much, but it's nicer nevertheless.
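A minimal sketch of the idea, using hypothetical types: the compute state holds `Option<ParsedSpec>` instead of a defaulted struct, and parsing a submitted spec fails up front when a required setting is missing. A `/configure` handler could turn that failure into an immediate HTTP error. Representing settings as a name/value map, and requiring `neon.timeline_id` in addition to `neon.tenant_id`, are assumptions for illustration.

```rust
use std::collections::HashMap;

/// Hypothetical parsed spec holding only the fields this sketch needs.
#[derive(Debug, PartialEq)]
pub struct ParsedSpec {
    pub tenant_id: String,
    pub timeline_id: String,
}

/// `None` until a spec has been received, instead of a struct full of defaults.
#[derive(Default)]
pub struct ComputeState {
    pub spec: Option<ParsedSpec>,
}

/// Validate required settings eagerly so the caller can reply with an error right away.
pub fn parse_spec(settings: &HashMap<String, String>) -> Result<ParsedSpec, String> {
    let get = |name: &str| {
        settings
            .get(name)
            .cloned()
            .ok_or_else(|| format!("{name} is missing from the spec"))
    };
    Ok(ParsedSpec {
        tenant_id: get("neon.tenant_id")?,
        timeline_id: get("neon.timeline_id")?,
    })
}

/// Hypothetical `/configure` handling: reject bad specs before touching the state.
pub fn handle_configure(
    state: &mut ComputeState,
    settings: &HashMap<String, String>,
) -> Result<(), String> {
    let spec = parse_spec(settings)?; // Err -> immediate HTTP error response
    state.spec = Some(spec);
    Ok(())
}
```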

TCP_KEEPALIVE is not enabled by default, so this prevents hung connections in case of abrupt client termination. Add a 'closed' flag to PostgresBackendReader and pass it when joining the handles, to prevent attempts to read from the socket if we errored out previously; with timeouts, this is now a common situation.

In the log, it looks like:

2023-04-10T18:08:37.493448Z INFO {cid=68}:WAL receiver{ttid=59f91ad4e821ab374f9ccdf918da3a85/16438f99d61572c72f0c7b0ed772785d}: terminated: timed out

Presumably fixes https://github.com/neondatabase/neon/issues/3971
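The 'closed' flag can be sketched synchronously with `std::io::Read`. The real `PostgresBackendReader` is async, and `GuardedReader` is a hypothetical name. Once a read fails, for example with a timeout (with a std `TcpStream` one could get one via `set_read_timeout`), the flag is set and later reads return an error immediately instead of touching the socket again.

```rust
use std::io::{self, Read};

/// Hypothetical wrapper around a socket-like reader.
pub struct GuardedReader<R> {
    inner: R,
    closed: bool,
}

impl<R: Read> GuardedReader<R> {
    pub fn new(inner: R) -> Self {
        GuardedReader { inner, closed: false }
    }

    pub fn is_closed(&self) -> bool {
        self.closed
    }
}

impl<R: Read> Read for GuardedReader<R> {
    fn read(&mut self, buf: &mut [u8]) -> io::Result<usize> {
        if self.closed {
            // We already errored out once; don't read from the socket again.
            return Err(io::Error::new(io::ErrorKind::NotConnected, "reader already closed"));
        }
        match self.inner.read(buf) {
            Ok(n) => Ok(n),
            Err(e) => {
                // Remember the failure (e.g. a timeout) for all later reads.
                self.closed = true;
                Err(e)
            }
        }
    }
}
```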

This is in preparation for using compute_ctl to launch postgres nodes in the neon_local control plane, and it seems like a good idea to separate the public interfaces anyway.

One non-mechanical change here is that the 'metrics' field is moved under the Mutex, instead of using atomics. We were using atomics here not for performance but for convenience, and it seems clearer not to use atomics in the model for the HTTP response type.
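The metrics change can be sketched as follows, with hypothetical field names. Instead of independently updated atomics, the metrics are a plain struct inside the same `Mutex` as the rest of the compute state. The HTTP handler can then take one lock and copy out a consistent snapshot, and the response model stays a plain data type.

```rust
use std::sync::Mutex;

/// Plain data type, usable directly as (part of) an HTTP response model.
#[derive(Clone, Debug, Default, PartialEq)]
pub struct ComputeMetrics {
    pub sync_safekeepers_ms: u64,
    pub basebackup_ms: u64,
    pub config_ms: u64,
}

#[derive(Default)]
pub struct ComputeState {
    pub status: String,          // other compute state lives here too
    pub metrics: ComputeMetrics, // previously: separate atomic fields
}

pub struct ComputeNode {
    pub state: Mutex<ComputeState>,
}

impl ComputeNode {
    /// Record a timing while holding the state lock.
    pub fn record_basebackup(&self, ms: u64) {
        self.state.lock().unwrap().metrics.basebackup_ms = ms;
    }

    /// Snapshot for the HTTP response: one lock, mutually consistent values.
    pub fn metrics_snapshot(&self) -> ComputeMetrics {
        self.state.lock().unwrap().metrics.clone()
    }
}
```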

Replaces `Box<(dyn io::AsyncRead + Unpin + Send + Sync + 'static)>` with `impl io::AsyncRead + Unpin + Send + Sync + 'static` usages in the `RemoteStorage` interface, to bring it closer to [`#![feature(async_fn_in_trait)]`](https://blog.rust-lang.org/inside-rust/2022/11/17/async-fn-in-trait-nightly.html).

For `GenericRemoteStorage`, replaces `type Target = dyn RemoteStorage` with an inherent impl that contains the `RemoteStorage` methods.

We could reuse the trait, but that would require importing it in every file where it's used, and would take us further from the unstable feature.
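A synchronous sketch of why the `Deref` to `dyn RemoteStorage` has to go. It uses `std::io::Read` in place of `AsyncRead`, and `MemStorage` and `CountingStorage` are hypothetical backends. A trait method that takes `impl Read` is generic, so the trait is no longer object-safe and `dyn RemoteStorage` cannot be formed. An enum whose inherent methods forward to each backend avoids the trait object, and callers of `GenericRemoteStorage` need no trait import.

```rust
use std::collections::HashMap;
use std::io::{self, Read};

/// Simplified storage trait: `impl Read` in argument position makes the
/// method generic, so `dyn RemoteStorage` is no longer possible.
pub trait RemoteStorage {
    fn upload(&mut self, from: impl Read, path: &str) -> io::Result<()>;
}

/// Hypothetical in-memory backend standing in for a real one.
#[derive(Default)]
pub struct MemStorage {
    pub files: HashMap<String, Vec<u8>>,
}

impl RemoteStorage for MemStorage {
    fn upload(&mut self, mut from: impl Read, path: &str) -> io::Result<()> {
        let mut data = Vec::new();
        from.read_to_end(&mut data)?;
        self.files.insert(path.to_string(), data);
        Ok(())
    }
}

/// Hypothetical backend that only counts uploaded bytes.
#[derive(Default)]
pub struct CountingStorage {
    pub bytes: u64,
}

impl RemoteStorage for CountingStorage {
    fn upload(&mut self, mut from: impl Read, _path: &str) -> io::Result<()> {
        self.bytes += io::copy(&mut from, &mut io::sink())?;
        Ok(())
    }
}

/// Instead of `Deref<Target = dyn RemoteStorage>`: an enum with inherent
/// methods that mirror the trait and dispatch with a `match`.
pub enum GenericRemoteStorage {
    Mem(MemStorage),
    Counting(CountingStorage),
}

impl GenericRemoteStorage {
    pub fn upload(&mut self, from: impl Read, path: &str) -> io::Result<()> {
        match self {
            GenericRemoteStorage::Mem(s) => s.upload(from, path),
            GenericRemoteStorage::Counting(s) => s.upload(from, path),
        }
    }
}
```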

After this PR, I've managed to create a patch with the changes:

https://github.com/neondatabase/neon/compare/kb/less-dyn-storage...kb/nightly-async-trait?expand=1

The current Rust implementation does not handle recursive async trait calls, so `UnreliableWrapper` was removed: it contained a `GenericRemoteStorage` that implemented the `RemoteStorage` trait, and itself implemented the trait, which nightly rustc rejected, suggesting to box the future instead.

Similarly, for nightly rustc to work, `GenericRemoteStorage` cannot implement `RemoteStorage`, since it calls the various remote storages' methods from inside.

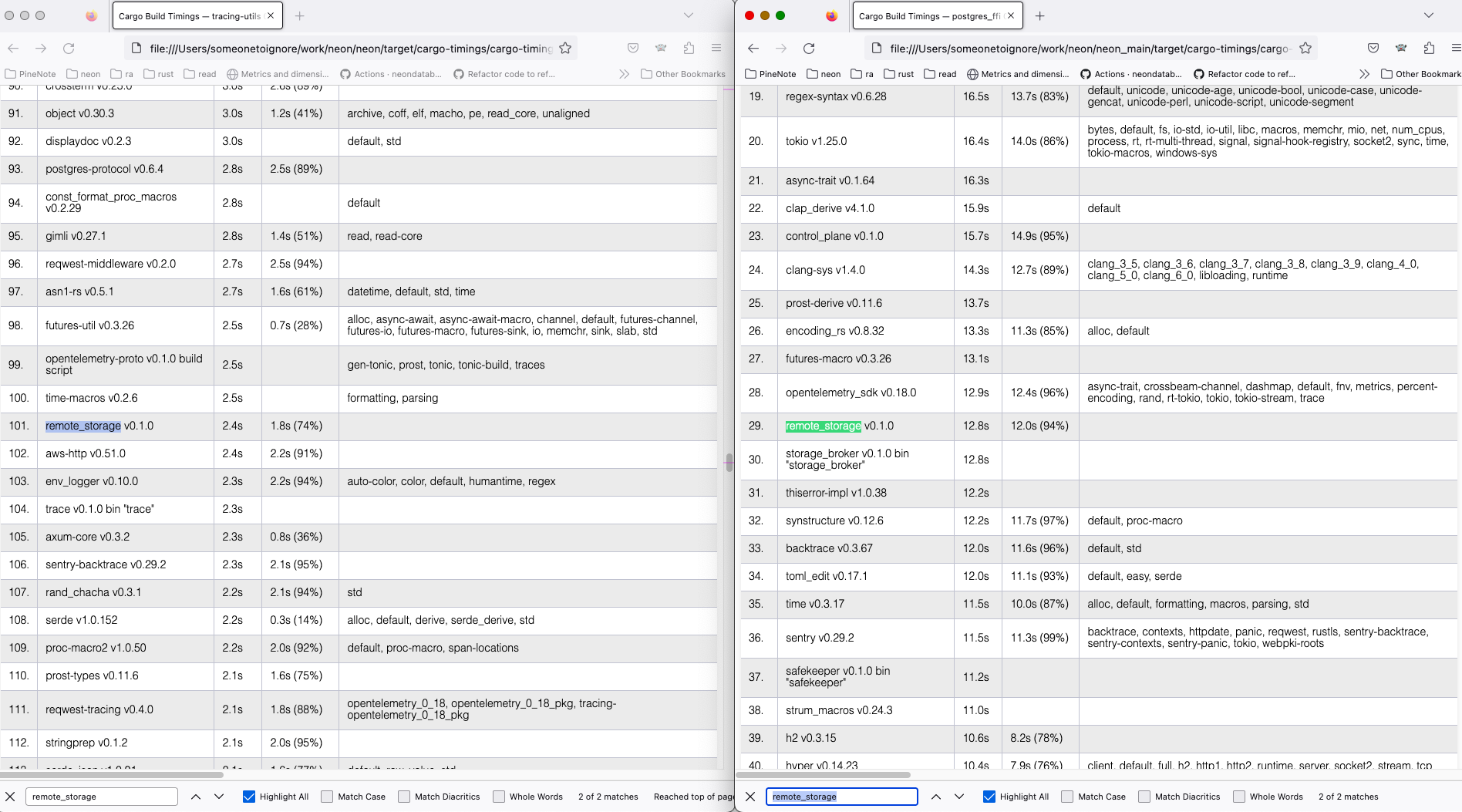

I've compiled the current `main` and the nightly branch, both with the `time env RUSTC_WRAPPER="" cargo +nightly build --all --timings` command, and got the following for the new feature (first) and for the old async_trait approach (second):

```
Finished dev [optimized + debuginfo] target(s) in 2m 04s
env RUSTC_WRAPPER="" cargo +nightly build --all --timings  1283.19s user 50.40s system 1074% cpu 2:04.15 total

Finished dev [optimized + debuginfo] target(s) in 2m 40s
env RUSTC_WRAPPER="" cargo +nightly build --all --timings  1288.59s user 52.06s system 834% cpu 2:40.71 total
```

On my machine, the `remote_storage` lib compilation takes ~10 less time with the nightly feature (left) than the regular main (right).

Full cargo reports are available at [timings.zip](https://github.com/neondatabase/neon/files/11179369/timings.zip)

In real-environment testing, we noticed that disk-usage-based eviction sails 1 percentage point above the configured value ("I configured 85% but pageserver sails at 86%"). This might be a source of confusion, so it's better to get rid of it now.

Co-authored-by: Christian Schwarz <christian@neon.tech>

In S3, the pageserver only lists tenants (prefixes), no other keys. Remove the list operation from the API, since the S3 implementation does not seem to work properly and is not used anyway.

This is the feedback originating from the pageserver, so change the previous confusing names:

s/ReplicationFeedback/PageserverFeedback

s/ps_writelsn/last_receive_lsn

s/ps_flushlsn/disk_consistent_lsn

s/ps_apply_lsn/remote_consistent_lsn

I haven't changed the on-the-wire format, to keep compatibility. However, understanding of the new field names is added to compute, so once all computes receive this patch, we can change the wire names as well. Safekeepers and pageservers are deployed at roughly the same time, and it is OK to live without feedback for that short period, so this is not a problem there.
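On the compute side, understanding the new field names can be sketched as mapping both the old wire keys and the new names onto the same field. The key/value form and this `PageserverFeedback` struct are assumptions for illustration, not the actual wire format or type.

```rust
/// Hypothetical subset of the feedback, using the new field names.
#[derive(Debug, Default, PartialEq)]
pub struct PageserverFeedback {
    pub last_receive_lsn: u64,
    pub disk_consistent_lsn: u64,
    pub remote_consistent_lsn: u64,
}

impl PageserverFeedback {
    /// Accept both the current wire names and the new ones, so the wire
    /// names can be switched later without breaking this side.
    /// Returns false for unknown keys, which are ignored.
    pub fn set_field(&mut self, key: &str, value: u64) -> bool {
        match key {
            "ps_writelsn" | "last_receive_lsn" => self.last_receive_lsn = value,
            "ps_flushlsn" | "disk_consistent_lsn" => self.disk_consistent_lsn = value,
            "ps_apply_lsn" | "remote_consistent_lsn" => self.remote_consistent_lsn = value,
            _ => return false,
        }
        true
    }
}
```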

This patch adds a pageserver-global background loop that evicts layers in response to a shortage of available bytes in the $repo/tenants directory's filesystem.

The loop runs periodically at a configurable `period`.

Each loop iteration uses `statvfs` to determine filesystem-level space usage. It compares the returned usage data against two different types of thresholds. The iteration tries to evict layers until app-internal accounting says we should be below the thresholds. We cross-check this internal accounting with the real world by making another `statvfs` at the end of the iteration. We're good if that second statvfs shows that we're _actually_ below the configured thresholds. If we're still above one or more thresholds, we emit a warning log message, leaving it to the operator to investigate further.

There are two thresholds:

- `max_usage_pct` is the maximum used space, expressed as a percentage of the total filesystem space. If the actual usage is higher, the threshold is exceeded.

- `min_avail_bytes` is the minimum available space, in bytes. If the actual available space is lower, the threshold is exceeded.
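The two threshold checks can be written down as follows. This sketch works on raw `statvfs`-style numbers (total and available bytes). `Thresholds` and `exceeded` are hypothetical names, used space is approximated as total minus available, and the text above does not specify how the pageserver itself rounds the percentage.

```rust
/// Hypothetical config mirroring the two thresholds.
pub struct Thresholds {
    pub max_usage_pct: u64,
    pub min_avail_bytes: u64,
}

/// Returns (usage threshold exceeded, available-bytes threshold exceeded)
/// for a filesystem with `total_bytes` capacity and `avail_bytes` available.
pub fn exceeded(t: &Thresholds, total_bytes: u64, avail_bytes: u64) -> (bool, bool) {
    let used = total_bytes.saturating_sub(avail_bytes);
    // used / total > max_usage_pct / 100, compared in integers (u128 avoids overflow).
    let usage_exceeded = (used as u128) * 100 > (t.max_usage_pct as u128) * (total_bytes as u128);
    let avail_exceeded = avail_bytes < t.min_avail_bytes;
    (usage_exceeded, avail_exceeded)
}
```

For example, with `max_usage_pct = 85`, a 1000-byte filesystem with 150 bytes available is exactly at 85% and does not exceed the threshold, while 140 bytes available (86%) does.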

The iteration evicts layers in LRU fashion with a reservation of up to `tenant_min_resident_size` bytes of the most recent layers per tenant. The layers not part of the per-tenant reservation are evicted least-recently-used first until we're below all thresholds. The `tenant_min_resident_size` can be overridden per tenant as `min_resident_size_override` (bytes).
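A simplified sketch of the victim selection, using a hypothetical `Layer` type with plain integers for sizes and access times. Per tenant, the most recently used layers are reserved as long as they fit within the reservation (the default or the per-tenant override). All remaining layers are evicted globally, least recently used first, until enough bytes are freed. How the real implementation treats a layer that straddles the reservation boundary is not described above; here, reservation stops at the first layer that would not fit.

```rust
use std::collections::HashMap;

/// Hypothetical layer descriptor.
#[derive(Clone, Debug, PartialEq)]
pub struct Layer {
    pub tenant: String,
    pub size: u64,
    pub last_access: u64, // larger = more recent
}

/// Pick layers to evict until at least `bytes_to_free` bytes are freed.
pub fn select_victims(
    layers: &[Layer],
    min_resident_size: u64,
    overrides: &HashMap<String, u64>,
    bytes_to_free: u64,
) -> Vec<Layer> {
    let mut by_tenant: HashMap<&str, Vec<&Layer>> = HashMap::new();
    for l in layers {
        by_tenant.entry(l.tenant.as_str()).or_default().push(l);
    }

    // Per tenant: reserve the most recent layers that fit into the reservation.
    let mut candidates: Vec<&Layer> = Vec::new();
    for (tenant, mut tl) in by_tenant {
        let limit = overrides.get(tenant).copied().unwrap_or(min_resident_size);
        tl.sort_by(|a, b| b.last_access.cmp(&a.last_access)); // most recent first
        let mut reserved = 0;
        let mut i = 0;
        while i < tl.len() && reserved + tl[i].size <= limit {
            reserved += tl[i].size;
            i += 1;
        }
        candidates.extend_from_slice(&tl[i..]);
    }

    // Globally: least recently used first, until enough is freed.
    candidates.sort_by_key(|l| l.last_access);
    let mut freed = 0;
    let mut victims = Vec::new();
    for l in candidates {
        if freed >= bytes_to_free {
            break;
        }
        freed += l.size;
        victims.push(l.clone());
    }
    victims
}
```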

In addition to the loop, there is also an HTTP endpoint to perform one loop iteration synchronously with the request. The endpoint takes an absolute number of bytes that the iteration needs to evict before pressure is relieved. The tests use this endpoint, which is a great simplification over setting up loopback mounts in the tests, which would be required to test the statvfs part of the implementation. We will rely on manual testing in staging to test the statvfs parts.

The HTTP endpoint is also handy in emergencies where an operator wants the pageserver to evict a given amount of space _now_. Hence, its arguments are documented in openapi_spec.yml. The response type isn't documented, though, because we don't consider it stable. The endpoint should _not_ be used by Console, but it could be used by on-call.

Co-authored-by: Joonas Koivunen <joonas@neon.tech>

Co-authored-by: Dmitry Rodionov <dmitry@neon.tech>

Co-authored-by: Heikki Linnakangas <heikki@neon.tech>

neon_local sends SIGQUIT, which otherwise dumps core by default. Also, remove the obsolete install_shutdown_handlers; in all binaries, it was overridden later by ShutdownSignals::handle.

ref https://github.com/neondatabase/neon/issues/3847

This PR enforces the current, newest `index_part.json` format (version `1`) in the type system, rejecting any of the older forms used in the past.

Similarly, the code to mitigate the https://github.com/neondatabase/neon/issues/3024 issue is now also removed.

The current code does not produce the old formats or extra files in index_part.json; in the future, we will be able to use https://github.com/neondatabase/aversion or another approach to make version transitions more explicit.

See https://neondb.slack.com/archives/C033RQ5SPDH/p1679134185248119 for the justification of the breaking changes.

The control plane currently only supports EdDSA. We need to either teach the storage to use EdDSA, or the control plane to use RSA. EdDSA is more modern, so let's use that.

We could support both, but it would require a little more code and tests, and we don't really need the flexibility since we control both sides.

Otherwise they get lost. Normally, the buffer is empty before the proxy pass, but this is not the case with the pipeline mode of our npm driver; this fixes the connection hangup introduced for it by b80fe41af3.

fixes https://github.com/neondatabase/neon/issues/3822

- handle automatically fixable future clippy lints

- tune run-clippy.sh to remove macOS specifics, which we no longer have

Co-authored-by: Alexander Bayandin <alexander@neon.tech>

Previously, we only accepted RS256. This seems like a pointless limitation, and when I was testing it with RS512 tokens, it took me a while to understand why it wasn't working.

1) Remove the allocation and data copy during each message read. Instead, parsing functions now accept a BytesMut from which they form messages, with pointers (e.g. in CopyData) pointing directly into the BytesMut buffer. Accordingly, move ConnectionError, which contains the IO error subtype, into framed.rs, which provides it, and leave only ProtocolError in pq_proto.

2) Remove anyhow from pq_proto.

3) Move FeStartupPacket out of FeMessage. Now FeStartupPacket::parse returns it directly, eliminating dead code where the caller wants the startup packet but has to match on the other variants.
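Item 1 can be illustrated with a std-only sketch of parsing a frontend message out of a read buffer without copying the payload: the returned `CopyData` borrows directly from the buffer. The real code works with `bytes::BytesMut`, and its message types differ. `FeMessage` here is a two-variant stand-in, and `parse_message` is a hypothetical name. The framing follows the PostgreSQL protocol: a 1-byte tag, then a 4-byte big-endian length that includes itself but not the tag, then the payload. `'d'` is CopyData and `'X'` is Terminate.

```rust
/// Stand-in frontend message: `CopyData` borrows its payload from the buffer.
#[derive(Debug, PartialEq)]
pub enum FeMessage<'a> {
    CopyData(&'a [u8]),
    Terminate,
}

#[derive(Debug, PartialEq)]
pub enum ProtocolError {
    BadLength(u32),
    UnknownTag(u8),
}

/// Parse one message from `buf`. Returns `Ok(None)` if more bytes are needed,
/// otherwise the message and the number of bytes it consumed.
pub fn parse_message(buf: &[u8]) -> Result<Option<(FeMessage<'_>, usize)>, ProtocolError> {
    if buf.len() < 5 {
        return Ok(None); // need tag + length
    }
    let tag = buf[0];
    let len = u32::from_be_bytes([buf[1], buf[2], buf[3], buf[4]]);
    if len < 4 {
        return Err(ProtocolError::BadLength(len));
    }
    let total = 1 + len as usize; // tag byte + length (which counts itself)
    if buf.len() < total {
        return Ok(None);
    }
    let body = &buf[5..total]; // a borrowed slice: no allocation, no copy
    let msg = match tag {
        b'd' => FeMessage::CopyData(body),
        b'X' => FeMessage::Terminate,
        other => return Err(ProtocolError::UnknownTag(other)),
    };
    Ok(Some((msg, total)))
}
```

The caller advances past the consumed bytes (with `BytesMut`, e.g. by splitting them off the front) and calls again for the next message.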

proxy stream.rs is adapted to framed.rs with minimal changes. It also benefits from the framed.rs improvements described above.