mirror of

https://github.com/neondatabase/neon.git

synced 2026-01-16 18:02:56 +00:00

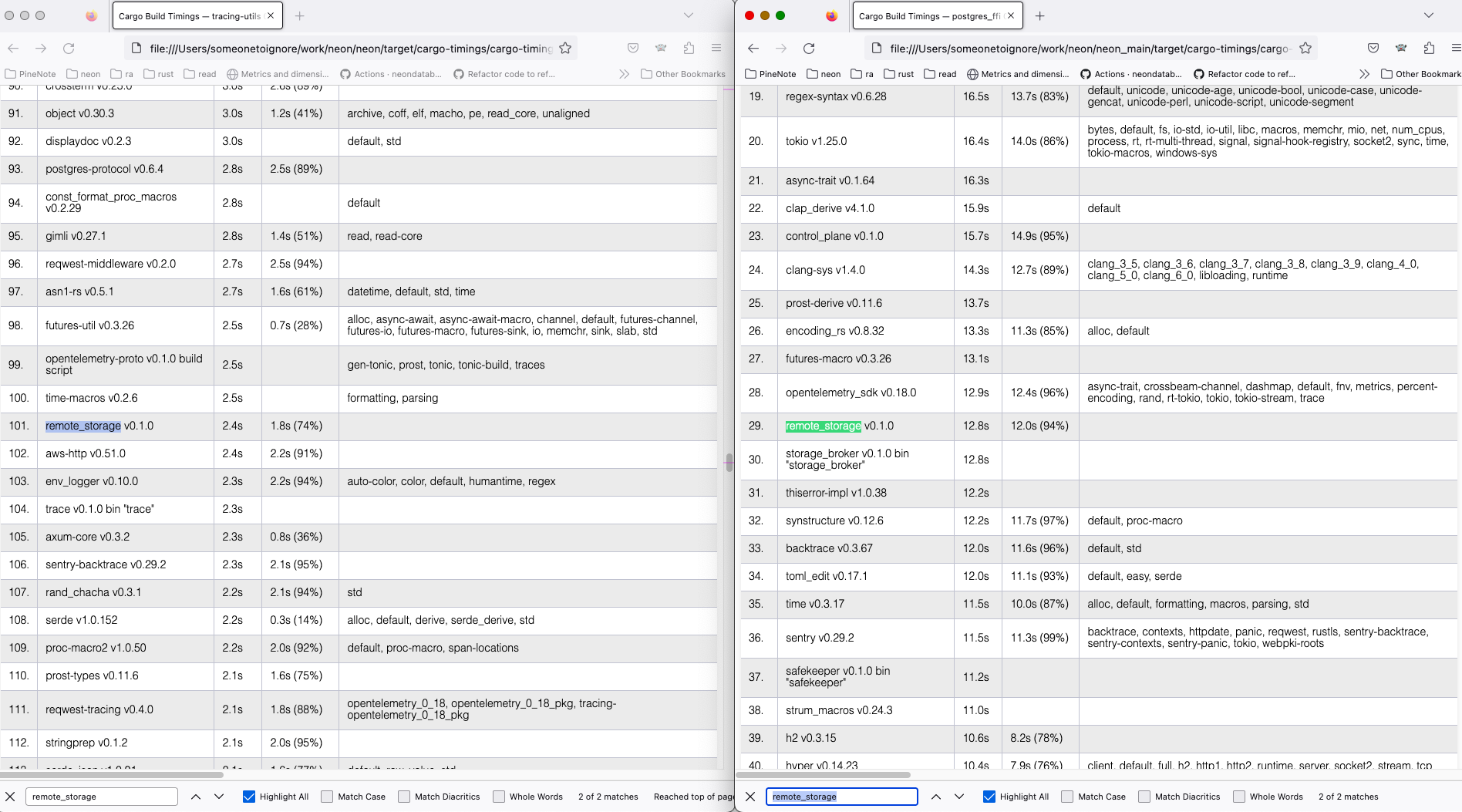

Replaces `Box<(dyn io::AsyncRead + Unpin + Send + Sync + 'static)>` with `impl io::AsyncRead + Unpin + Send + Sync + 'static` usages in the `RemoteStorage` interface, to make it closer to [`#![feature(async_fn_in_trait)]`](https://blog.rust-lang.org/inside-rust/2022/11/17/async-fn-in-trait-nightly.html).

For `GenericRemoteStorage`, replaces `type Target = dyn RemoteStorage` with another impl with `RemoteStorage` methods inside it. We could reuse the trait, but that would require importing the trait in every file where it's used and would move us farther from the unstable feature.

After this PR, I've managed to create a patch with the changes: https://github.com/neondatabase/neon/compare/kb/less-dyn-storage...kb/nightly-async-trait?expand=1

The current Rust implementation does not like recursive async trait calls, so `UnreliableWrapper` was removed: it contained a `GenericRemoteStorage` that implemented the `RemoteStorage` trait, and itself implemented the trait, which nightly rustc did not like and proposed to box the future. Similarly, `GenericRemoteStorage` cannot implement `RemoteStorage` for nightly rustc to work, since it calls various remote storages' methods from inside.

I've compiled current `main` and the nightly branch, both with the `time env RUSTC_WRAPPER="" cargo +nightly build --all --timings` command, and got

```
Finished dev [optimized + debuginfo] target(s) in 2m 04s
env RUSTC_WRAPPER="" cargo +nightly build --all --timings 1283.19s user 50.40s system 1074% cpu 2:04.15 total
```

for the new feature tried, and

```
Finished dev [optimized + debuginfo] target(s) in 2m 40s
env RUSTC_WRAPPER="" cargo +nightly build --all --timings 1288.59s user 52.06s system 834% cpu 2:40.71 total
```

for the old async_trait approach.

On my machine, the `remote_storage` lib compilation takes ~10 less time with the nightly feature (left) than the regular main (right).

Full cargo reports are available at [timings.zip](https://github.com/neondatabase/neon/files/11179369/timings.zip)
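The difference between the boxed and the `impl` parameter style can be sketched as follows. This is a minimal illustration, not code from the PR: it assumes the synchronous `std::io::Read` as a stand-in for `tokio::io::AsyncRead` so that it builds with the standard library alone, and the function names `upload_boxed` and `upload_impl` are hypothetical.

```rust
use std::io::{self, Read};

/// Dynamic dispatch: every caller has to box the reader first.
/// (Hypothetical function; `Read` stands in for `tokio::io::AsyncRead`.)
fn upload_boxed(mut data: Box<dyn Read + Send + Sync + 'static>) -> io::Result<usize> {
    let mut buf = Vec::new();
    // Returns the number of bytes read from the source.
    data.read_to_end(&mut buf)
}

/// Static dispatch: any concrete reader can be passed directly,
/// and the compiler generates one copy of the function per reader type.
fn upload_impl(mut data: impl Read + Send + Sync + 'static) -> io::Result<usize> {
    let mut buf = Vec::new();
    data.read_to_end(&mut buf)
}
```

A caller would write `upload_boxed(Box::new(reader))` in the first style and `upload_impl(reader)` in the second. Note that a method with an `impl Trait` parameter is generic, so a trait containing it cannot be used as `dyn Trait` for that method, which is consistent with the PR moving `GenericRemoteStorage` away from `dyn RemoteStorage`.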

123 lines

4.1 KiB

Rust


//! This module provides a wrapper around a real RemoteStorage implementation that
//! causes the first N attempts at each upload or download operation to fail. For
//! testing purposes.
use std::collections::hash_map::Entry;
use std::collections::HashMap;
use std::sync::Mutex;

use crate::{Download, DownloadError, RemotePath, RemoteStorage, StorageMetadata};

pub struct UnreliableWrapper {
    inner: crate::GenericRemoteStorage,

    // This many attempts of each operation will fail, then we let it succeed.
    attempts_to_fail: u64,

    // Tracks how many failed attempts of each operation have been made.
    attempts: Mutex<HashMap<RemoteOp, u64>>,
}

/// Used to identify retries of different unique operations.
#[derive(Debug, Hash, Eq, PartialEq)]
enum RemoteOp {
    ListPrefixes(Option<RemotePath>),
    Upload(RemotePath),
    Download(RemotePath),
    Delete(RemotePath),
}

impl UnreliableWrapper {
    pub fn new(inner: crate::GenericRemoteStorage, attempts_to_fail: u64) -> Self {
        assert!(attempts_to_fail > 0);
        UnreliableWrapper {
            inner,
            attempts_to_fail,
            attempts: Mutex::new(HashMap::new()),
        }
    }

    ///
    /// Common functionality for all operations.
    ///
    /// On the first attempts of this operation, return an error. After 'attempts_to_fail'
    /// attempts, let the operation go ahead, and clear the counter.
    ///
    fn attempt(&self, op: RemoteOp) -> Result<u64, DownloadError> {
        let mut attempts = self.attempts.lock().unwrap();

        match attempts.entry(op) {
            Entry::Occupied(mut e) => {
                let attempts_before_this = {
                    let p = e.get_mut();
                    *p += 1;
                    *p
                };

                if attempts_before_this >= self.attempts_to_fail {
                    // let it succeed
                    e.remove();
                    Ok(attempts_before_this)
                } else {
                    let error =
                        anyhow::anyhow!("simulated failure of remote operation {:?}", e.key());
                    Err(DownloadError::Other(error))
                }
            }
            Entry::Vacant(e) => {
                let error = anyhow::anyhow!("simulated failure of remote operation {:?}", e.key());
                e.insert(1);
                Err(DownloadError::Other(error))
            }
        }
    }
}

#[async_trait::async_trait]
impl RemoteStorage for UnreliableWrapper {
    async fn list_prefixes(
        &self,
        prefix: Option<&RemotePath>,
    ) -> Result<Vec<RemotePath>, DownloadError> {
        self.attempt(RemoteOp::ListPrefixes(prefix.cloned()))?;
        self.inner.list_prefixes(prefix).await
    }

    async fn upload(
        &self,
        data: impl tokio::io::AsyncRead + Unpin + Send + Sync + 'static,
        // S3 PUT request requires the content length to be specified,
        // otherwise it starts to fail with the concurrent connection count increasing.
        data_size_bytes: usize,
        to: &RemotePath,
        metadata: Option<StorageMetadata>,
    ) -> anyhow::Result<()> {
        self.attempt(RemoteOp::Upload(to.clone()))?;
        self.inner.upload(data, data_size_bytes, to, metadata).await
    }

    async fn download(&self, from: &RemotePath) -> Result<Download, DownloadError> {
        self.attempt(RemoteOp::Download(from.clone()))?;
        self.inner.download(from).await
    }

    async fn download_byte_range(
        &self,
        from: &RemotePath,
        start_inclusive: u64,
        end_exclusive: Option<u64>,
    ) -> Result<Download, DownloadError> {
        // Note: We treat any download_byte_range as an "attempt" of the same
        // operation. We don't pay attention to the ranges. That's good enough
        // for now.
        self.attempt(RemoteOp::Download(from.clone()))?;
        self.inner
            .download_byte_range(from, start_inclusive, end_exclusive)
            .await
    }

    async fn delete(&self, path: &RemotePath) -> anyhow::Result<()> {
        self.attempt(RemoteOp::Delete(path.clone()))?;
        self.inner.delete(path).await
    }
}
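The counting logic in `attempt` can be tried out in isolation with a standard-library-only sketch. The `FailFirstN` type below is hypothetical and not part of the crate: it assumes a plain `String` key in place of `RemoteOp` and a `String` error in place of `DownloadError`, but follows the same `Entry`-based bookkeeping.

```rust
use std::collections::hash_map::Entry;
use std::collections::HashMap;
use std::sync::Mutex;

/// Hypothetical stand-in for `UnreliableWrapper`, reduced to the counter.
struct FailFirstN {
    attempts_to_fail: u64,
    attempts: Mutex<HashMap<String, u64>>,
}

impl FailFirstN {
    fn new(attempts_to_fail: u64) -> Self {
        assert!(attempts_to_fail > 0);
        FailFirstN {
            attempts_to_fail,
            attempts: Mutex::new(HashMap::new()),
        }
    }

    /// Same shape as `attempt` above: the first call for a key always fails,
    /// later calls bump the counter and succeed once it reaches the limit.
    fn attempt(&self, op: &str) -> Result<u64, String> {
        let mut attempts = self.attempts.lock().unwrap();
        match attempts.entry(op.to_string()) {
            Entry::Occupied(mut e) => {
                *e.get_mut() += 1;
                let seen = *e.get(); // count including the current call
                if seen >= self.attempts_to_fail {
                    e.remove(); // clear the counter so the cycle restarts
                    Ok(seen)
                } else {
                    Err(format!("simulated failure of remote operation {:?}", e.key()))
                }
            }
            Entry::Vacant(e) => {
                let error = format!("simulated failure of remote operation {:?}", e.key());
                e.insert(1);
                Err(error)
            }
        }
    }
}
```

With `FailFirstN::new(3)`, the first two calls for a given key return `Err` and the third returns `Ok(3)`, because the counter is incremented before the comparison and so includes the current call. After a success the entry is removed, so the next call for the same key fails again, and different keys are counted independently.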