
Real-Time & Cloud-Native Observability Database

for metrics, logs, and traces

Delivers sub-second querying at PB scale and exceptional cost efficiency from edge to cloud.

- Introduction

- ⭐ Key Features

- Quick Comparison

- Architecture

- Try GreptimeDB

- Getting Started

- Build From Source

- Tools & Extensions

- Project Status

- Community

- License

- Commercial Support

- Contributing

- Acknowledgement

Introduction

GreptimeDB is an open-source, cloud-native database that unifies metrics, logs, and traces, enabling real-time observability at any scale — across edge, cloud, and hybrid environments.

Features

| Feature | Description |
|---|---|
| All-in-One Observability | OpenTelemetry-native platform unifying metrics, logs, and traces. Query via SQL, PromQL, and Flow. |
| High Performance | Written in Rust with rich indexing (inverted, fulltext, skipping, vector), delivering sub-second responses at PB scale. |
| Cost Efficiency | 50x lower operational and storage costs with compute-storage separation and native object storage (S3, Azure Blob, etc.). |
| Cloud-Native & Scalable | Purpose-built for Kubernetes with unlimited cross-cloud scaling, handling hundreds of thousands of concurrent requests. |
| Developer-Friendly | SQL/PromQL interfaces, built-in web dashboard, REST API, MySQL/PostgreSQL protocol compatibility, and native OpenTelemetry support. |
| Flexible Deployment | Deploy anywhere from ARM-based edge devices (including Android) to cloud, with unified APIs and efficient data sync. |

✅ Perfect for:

- Unified observability stack replacing Prometheus + Loki + Tempo

- Large-scale metrics with high cardinality (millions to billions of time series)

- Large-scale observability platform requiring cost efficiency and scalability

- IoT and edge computing with resource and bandwidth constraints

Learn more in "Why GreptimeDB" and "Observability 2.0 and the Database for It".

Quick Comparison

| Feature | GreptimeDB | Traditional TSDB | Log Stores |
|---|---|---|---|
| Data Types | Metrics, Logs, Traces | Metrics only | Logs only |
| Query Language | SQL, PromQL | Custom/PromQL | Custom/DSL |
| Deployment | Edge + Cloud | Cloud/On-prem | Mostly central |
| Indexing & Performance | PB-Scale, Sub-second | Varies | Varies |
| Integration | REST API, SQL, Common protocols | Varies | Varies |

Performance:

Read more benchmark reports.

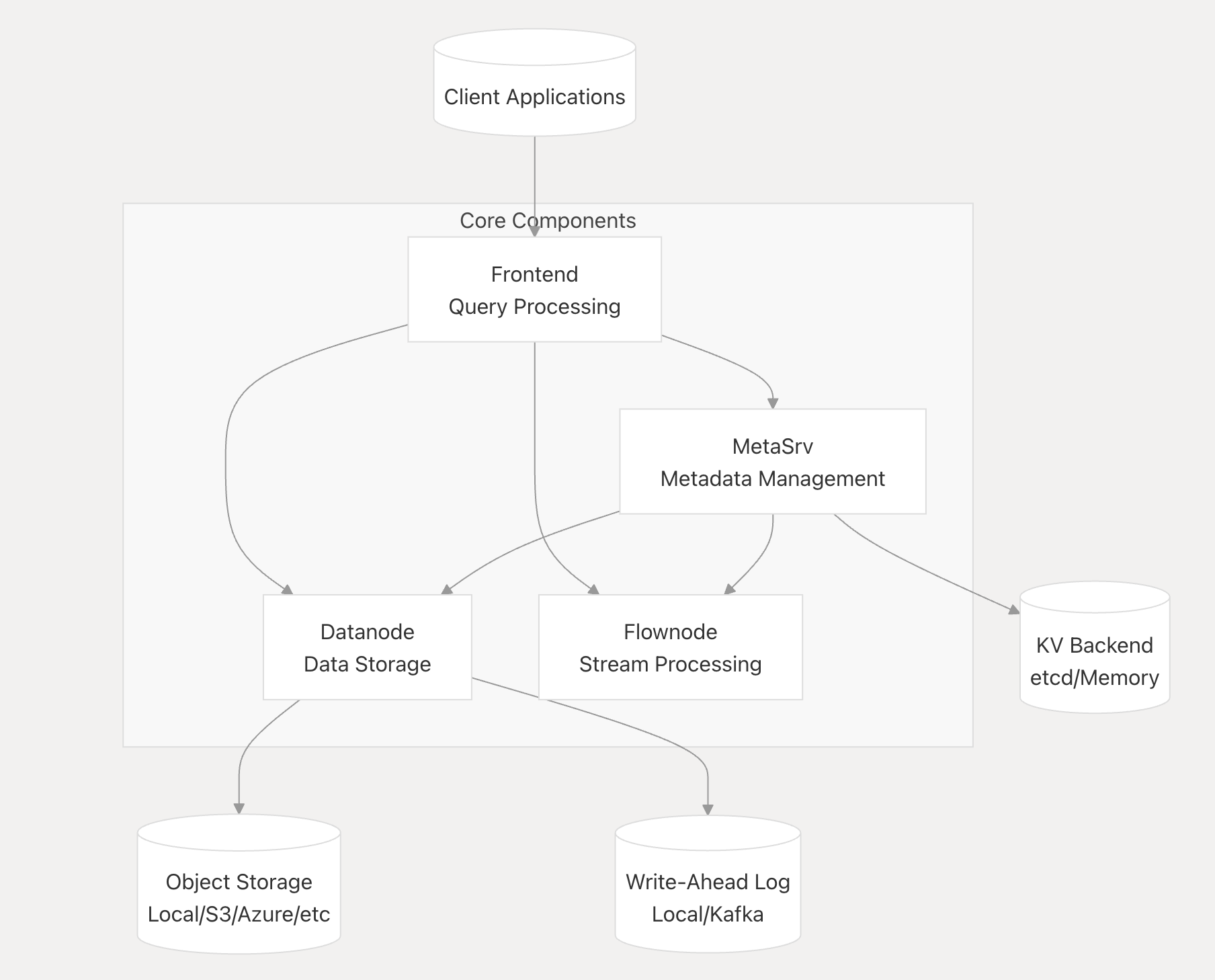

Architecture

GreptimeDB can run in two modes:

- Standalone Mode - Single binary for development and small deployments

- Distributed Mode - Separate components for production scale:
  - Frontend: Query processing and protocol handling
  - Datanode: Data storage and retrieval
  - Metasrv: Metadata management and coordination

Read the architecture document. DeepWiki provides an in-depth look at GreptimeDB.

Try GreptimeDB

    docker pull greptime/greptimedb

    docker run -p 127.0.0.1:4000-4003:4000-4003 \
      -v "$(pwd)/greptimedb_data:/greptimedb_data" \
      --name greptime --rm \
      greptime/greptimedb:latest standalone start \
      --http-addr 0.0.0.0:4000 \
      --rpc-bind-addr 0.0.0.0:4001 \
      --mysql-addr 0.0.0.0:4002 \
      --postgres-addr 0.0.0.0:4003

Dashboard: http://localhost:4000/dashboard

Read more in the full Install Guide.
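Once the container from the command above is running, the HTTP port (4000) can be used to submit SQL. The following is a minimal Python sketch, not an official client. It assumes the HTTP API accepts a form-encoded `sql` field at `/v1/sql` and a `db` query parameter, as described in the GreptimeDB HTTP API documentation; verify both against the docs for your version. The helper names `build_sql_request` and `run_sql` are hypothetical.

```python
import json
import urllib.parse
import urllib.request


def build_sql_request(sql, host="localhost", port=4000, db="public"):
    """Build (but do not send) a POST request carrying one SQL statement.

    Assumption: the server exposes /v1/sql and reads the statement from a
    form-encoded `sql` field; check the HTTP API docs for your version.
    """
    url = f"http://{host}:{port}/v1/sql?" + urllib.parse.urlencode({"db": db})
    body = urllib.parse.urlencode({"sql": sql}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


def run_sql(sql, **kwargs):
    """Send the statement and return the decoded JSON response."""
    request = build_sql_request(sql, **kwargs)
    with urllib.request.urlopen(request, timeout=5) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the container running, `run_sql("SELECT 1")` should return the server's JSON response. Building the request separately from sending it keeps the URL and body easy to inspect without a live server.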

Troubleshooting:

- Cannot connect to the database? Ensure that ports `4000`, `4001`, `4002`, and `4003` are not blocked by a firewall or used by other services.

- Failed to start? Check the container logs with `docker logs greptime` for further details.
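As a rough aid for the first troubleshooting item, the sketch below tries a TCP connection to each of the four published ports. It is an illustration only: the helper names `check_ports` and `describe` are hypothetical, and a successful connection shows only that something is listening on the port, not that it is GreptimeDB.

```python
import socket


def check_ports(host="127.0.0.1", ports=(4000, 4001, 4002, 4003), timeout=1.0):
    """Return {port: bool}; True means a TCP connection to the port succeeded."""
    results = {}
    for port in ports:
        try:
            # create_connection raises OSError on refusal, timeout, or filtering.
            with socket.create_connection((host, port), timeout=timeout):
                results[port] = True
        except OSError:
            results[port] = False
    return results


def describe(results):
    """Turn the result mapping into one human-readable line per port."""
    return [
        f"port {port}: {'reachable' if ok else 'not reachable'}"
        for port, ok in sorted(results.items())
    ]
```

Calling `print("\n".join(describe(check_ports())))` while the container is running lists which ports accept connections. If the ports are not reachable before the container starts, nothing else is using them. If they are not reachable after it starts, check the firewall and the container logs.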

Getting Started

Build From Source

Prerequisites:

- Rust toolchain (nightly)

- Protobuf compiler (>= 3.15)

- C/C++ building essentials, including `gcc`/`g++`/`autoconf` and the glibc library (e.g. `libc6-dev` on Ubuntu and `glibc-devel` on Fedora)

- Python toolchain (optional): Required only if using some test scripts.

Build and Run:

    make
    cargo run -- standalone start

Tools & Extensions

- Kubernetes: GreptimeDB Operator

- Helm Charts: Greptime Helm Charts

- Dashboard: Web UI

- gRPC Ingester: Go, Java, C++, Erlang, Rust

- Grafana Data Source: GreptimeDB Grafana data source plugin

- Grafana Dashboard: Official Dashboard for monitoring

Project Status

Status: Beta, marching toward v1.0 GA!

GA (v1.0): January 10, 2026

- Deployed in production by open-source projects and commercial users

- Stable, actively maintained, with regular releases (version info)

- Suitable for evaluation and pilot deployments

GreptimeDB v1.0 represents a major milestone toward maturity — marking stable APIs, production readiness, and proven performance.

Roadmap: Beta1 (Nov 10) → Beta2 (Nov 24) → RC1 (Dec 8) → GA (Jan 10, 2026). See the v1.0 highlights and release plan for details.

For production use, we recommend using the latest stable release.

If you find this project useful, a ⭐ would mean a lot to us!

Community

We invite you to engage and contribute!

License

GreptimeDB is licensed under the Apache License 2.0.

Commercial Support

Running GreptimeDB in your organization? We offer enterprise add-ons, services, training, and consulting. Contact us for details.

Contributing

- Read our Contribution Guidelines.

- Explore Internal Concepts and DeepWiki.

- Pick up a good first issue and join the #contributors Slack channel.

Acknowledgement

Special thanks to all contributors! See AUTHORS.md.

- Uses Apache Arrow™ (memory model)

- Apache Parquet™ (file storage)

- Apache DataFusion™ (query engine)

- Apache OpenDAL™ (data access abstraction)