Mirror of https://github.com/lancedb/lancedb.git (synced 2026-01-03 18:32:55 +00:00)

Merge branch 'main' of https://github.com/lancedb/lancedb into yang/relative-lance-dep
@@ -1,5 +1,5 @@
[tool.bumpversion]
current_version = "0.10.0-beta.1"
current_version = "0.11.0-beta.1"
parse = """(?x)
    (?P<major>0|[1-9]\\d*)\\.
    (?P<minor>0|[1-9]\\d*)\\.
@@ -24,34 +24,56 @@ commit = true
message = "Bump version: {current_version} → {new_version}"
commit_args = ""

# Java maven files
pre_commit_hooks = [
    """
    NEW_VERSION="${BVHOOK_NEW_MAJOR}.${BVHOOK_NEW_MINOR}.${BVHOOK_NEW_PATCH}"
    if [ ! -z "$BVHOOK_NEW_PRE_L" ] && [ ! -z "$BVHOOK_NEW_PRE_N" ]; then
        NEW_VERSION="${NEW_VERSION}-${BVHOOK_NEW_PRE_L}.${BVHOOK_NEW_PRE_N}"
    fi
    echo "Constructed new version: $NEW_VERSION"
    cd java && mvn versions:set -DnewVersion=$NEW_VERSION && mvn versions:commit

    # Check for any modified but unstaged pom.xml files
    MODIFIED_POMS=$(git ls-files -m | grep pom.xml)
    if [ ! -z "$MODIFIED_POMS" ]; then
        echo "The following pom.xml files were modified but not staged. Adding them now:"
        echo "$MODIFIED_POMS" | while read -r file; do
            git add "$file"
            echo "Added: $file"
        done
    fi
    """,
]

[tool.bumpversion.parts.pre_l]
values = ["beta", "final"]
optional_value = "final"
values = ["beta", "final"]

[[tool.bumpversion.files]]
filename = "node/package.json"
search = "\"version\": \"{current_version}\","
replace = "\"version\": \"{new_version}\","
search = "\"version\": \"{current_version}\","

[[tool.bumpversion.files]]
filename = "nodejs/package.json"
search = "\"version\": \"{current_version}\","
replace = "\"version\": \"{new_version}\","
search = "\"version\": \"{current_version}\","

# nodejs binary packages
[[tool.bumpversion.files]]
glob = "nodejs/npm/*/package.json"
search = "\"version\": \"{current_version}\","
replace = "\"version\": \"{new_version}\","
search = "\"version\": \"{current_version}\","

# Cargo files
# ------------
[[tool.bumpversion.files]]
filename = "rust/ffi/node/Cargo.toml"
search = "\nversion = \"{current_version}\""
replace = "\nversion = \"{new_version}\""
search = "\nversion = \"{current_version}\""

[[tool.bumpversion.files]]
filename = "rust/lancedb/Cargo.toml"
search = "\nversion = \"{current_version}\""
replace = "\nversion = \"{new_version}\""
search = "\nversion = \"{current_version}\""

`.github/workflows/java-publish.yml` (5 lines changed)

@@ -94,11 +94,16 @@ jobs:
          mkdir -p ./core/target/classes/nativelib/darwin-aarch64 ./core/target/classes/nativelib/linux-aarch64
          cp ../liblancedb_jni_darwin_aarch64.zip/liblancedb_jni.dylib ./core/target/classes/nativelib/darwin-aarch64/liblancedb_jni.dylib
          cp ../liblancedb_jni_linux_aarch64.zip/liblancedb_jni.so ./core/target/classes/nativelib/linux-aarch64/liblancedb_jni.so
      - name: Dry run
        if: github.event_name == 'pull_request'
        run: |
          mvn --batch-mode -DskipTests package
      - name: Set github
        run: |
          git config --global user.email "LanceDB Github Runner"
          git config --global user.name "dev+gha@lancedb.com"
      - name: Publish with Java 8
        if: github.event_name == 'release'
        run: |
          echo "use-agent" >> ~/.gnupg/gpg.conf
          echo "pinentry-mode loopback" >> ~/.gnupg/gpg.conf

`.github/workflows/make-release-commit.yml` (2 lines changed)

@@ -30,7 +30,7 @@ on:
        default: true
        type: boolean
      other:
        description: 'Make a Node/Rust release'
        description: 'Make a Node/Rust/Java release'
        required: true
        default: true
        type: boolean

`.github/workflows/rust.yml` (3 lines changed)

@@ -30,7 +30,6 @@ jobs:
    defaults:
      run:
        shell: bash
        working-directory: rust
    env:
      # Need up-to-date compilers for kernels
      CC: gcc-12
@@ -50,7 +49,7 @@ jobs:
      - name: Run format
        run: cargo fmt --all -- --check
      - name: Run clippy
        run: cargo clippy --all --all-features -- -D warnings
        run: cargo clippy --workspace --tests --all-features -- -D warnings
  linux:
    timeout-minutes: 30
    # To build all features, we need more disk space than is available

`Cargo.toml` (12 lines changed)

@@ -20,12 +20,12 @@ keywords = ["lancedb", "lance", "database", "vector", "search"]
categories = ["database-implementations"]

[workspace.dependencies]
lance = { "version" = "=0.17.0", "features" = ["dynamodb"], path = "../lance/rust/lance"}
lance-index = { "version" = "=0.17.0", path = "../lance/rust/lance-index"}
lance-linalg = { "version" = "=0.17.0", path = "../lance/rust/lance-linalg"}
lance-testing = { "version" = "=0.17.0", path = "../lance/rust/lance-testing"}
lance-datafusion = { "version" = "=0.17.0", path = "../lance/rust/lance-datafusion"}
lance-encoding = { "version" = "=0.17.0", path = "../lance/rust/lance-encoding"}
lance = { "version" = "=0.18.0", "features" = ["dynamodb"], path = "../lance/rust/lance"}
lance-index = { "version" = "=0.18.0", path = "../lance/rust/lance-index"}
lance-linalg = { "version" = "=0.18.0", path = "../lance/rust/lance-linalg"}
lance-testing = { "version" = "=0.18.0", path = "../lance/rust/lance-testing"}
lance-datafusion = { "version" = "=0.18.0", path = "../lance/rust/lance-datafusion"}
lance-encoding = { "version" = "=0.18.0", path = "../lance/rust/lance-encoding"}
# Note that this one does not include pyarrow
arrow = { version = "52.2", optional = false }
arrow-array = "52.2"

@@ -34,6 +34,7 @@ theme:
    - navigation.footer
    - navigation.tracking
    - navigation.instant
    - content.footnote.tooltips
  icon:
    repo: fontawesome/brands/github
    annotation: material/arrow-right-circle
@@ -65,6 +66,11 @@ plugins:
markdown_extensions:
  - admonition
  - footnotes
  - pymdownx.critic
  - pymdownx.caret
  - pymdownx.keys
  - pymdownx.mark
  - pymdownx.tilde
  - pymdownx.details
  - pymdownx.highlight:
      anchor_linenums: true
@@ -106,6 +112,17 @@ nav:
      - Overview: hybrid_search/hybrid_search.md
      - Comparing Rerankers: hybrid_search/eval.md
      - Airbnb financial data example: notebooks/hybrid_search.ipynb
    - RAG:
      - Vanilla RAG: rag/vanilla_rag.md
      - Multi-head RAG: rag/multi_head_rag.md
      - Corrective RAG: rag/corrective_rag.md
      - Agentic RAG: rag/agentic_rag.md
      - Graph RAG: rag/graph_rag.md
      - Self RAG: rag/self_rag.md
      - Adaptive RAG: rag/adaptive_rag.md
      - Advanced Techniques:
        - HyDE: rag/advanced_techniques/hyde.md
        - FLARE: rag/advanced_techniques/flare.md
    - Reranking:
      - Quickstart: reranking/index.md
      - Cohere Reranker: reranking/cohere.md
@@ -127,7 +144,8 @@ nav:
      - Reranking: guides/tuning_retrievers/2_reranking.md
      - Embedding fine-tuning: guides/tuning_retrievers/3_embed_tuning.md
  - 🧬 Managing embeddings:
    - Overview: embeddings/index.md
    - Understand Embeddings: embeddings/understanding_embeddings.md
    - Get Started: embeddings/index.md
    - Embedding functions: embeddings/embedding_functions.md
    - Available models:
      - Overview: embeddings/default_embedding_functions.md
@@ -165,6 +183,7 @@ nav:
    - Voxel51: integrations/voxel51.md
    - PromptTools: integrations/prompttools.md
    - dlt: integrations/dlt.md
    - phidata: integrations/phidata.md
  - 🎯 Examples:
    - Overview: examples/index.md
    - 🐍 Python:
@@ -220,6 +239,17 @@ nav:
      - Overview: hybrid_search/hybrid_search.md
      - Comparing Rerankers: hybrid_search/eval.md
      - Airbnb financial data example: notebooks/hybrid_search.ipynb
    - RAG:
      - Vanilla RAG: rag/vanilla_rag.md
      - Multi-head RAG: rag/multi_head_rag.md
      - Corrective RAG: rag/corrective_rag.md
      - Agentic RAG: rag/agentic_rag.md
      - Graph RAG: rag/graph_rag.md
      - Self RAG: rag/self_rag.md
      - Adaptive RAG: rag/adaptive_rag.md
      - Advanced Techniques:
        - HyDE: rag/advanced_techniques/hyde.md
        - FLARE: rag/advanced_techniques/flare.md
    - Reranking:
      - Quickstart: reranking/index.md
      - Cohere Reranker: reranking/cohere.md
@@ -241,7 +271,8 @@ nav:
      - Reranking: guides/tuning_retrievers/2_reranking.md
      - Embedding fine-tuning: guides/tuning_retrievers/3_embed_tuning.md
  - Managing Embeddings:
    - Overview: embeddings/index.md
    - Understand Embeddings: embeddings/understanding_embeddings.md
    - Get Started: embeddings/index.md
    - Embedding functions: embeddings/embedding_functions.md
    - Available models:
      - Overview: embeddings/default_embedding_functions.md
@@ -275,6 +306,7 @@ nav:
    - Voxel51: integrations/voxel51.md
    - PromptTools: integrations/prompttools.md
    - dlt: integrations/dlt.md
    - phidata: integrations/phidata.md
  - Examples:
    - examples/index.md
    - 🐍 Python:

`docs/src/embeddings/understanding_embeddings.md` (new file, 133 lines)

@@ -0,0 +1,133 @@
# Understand Embeddings

The term **dimension** is a synonym for the number of elements in a feature vector. Each feature can be thought of as a different axis in a geometric space.

High-dimensional data means there are many features (or attributes) in the data.

!!! example
    1. An image is a data point, and it might have thousands of dimensions because each pixel can be considered a feature.

    2. Text data, when represented by each word or character, can also lead to high dimensions, especially when considering all possible words in a language.
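As a rough illustration of how raw data turns into high-dimensional vectors, the sketch below does two things. It flattens a hypothetical 28×28 grayscale image into a 784-element feature vector. It also one-hot encodes a word over a toy vocabulary, where the number of dimensions equals the vocabulary size. The image size, the vocabulary, and the helper names are arbitrary assumptions chosen for the example.

```python
def flatten_image(pixels):
    """Flatten a 2-D grid of pixel intensities into a 1-D feature vector."""
    return [value for row in pixels for value in row]


def one_hot(word, vocabulary):
    """Represent a word as a vector with one dimension per vocabulary entry."""
    return [1 if entry == word else 0 for entry in vocabulary]


# A hypothetical 28x28 grayscale image: every pixel is one feature.
image = [[0] * 28 for _ in range(28)]
image_vector = flatten_image(image)
print(len(image_vector))  # 784

# A toy vocabulary; real vocabularies contain tens of thousands of words.
vocabulary = ["cat", "dog", "puppy", "sea", "old", "man"]
word_vector = one_hot("puppy", vocabulary)
print(word_vector)  # [0, 0, 1, 0, 0, 0]
```

Both representations are wide and mostly zeros. An embedding model maps inputs like these to much shorter, dense vectors.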

An embedding captures **meaning and relationships** within data by mapping high-dimensional data into a lower-dimensional space. It does so by placing inputs that are more **similar in meaning** closer together in the **embedding space**.

## What are Vector Embeddings?

Vector embeddings are a way to convert complex data, such as text, images, or audio, into numerical coordinates (called vectors) that can be plotted in an n-dimensional space (the embedding space).

The more closely related two data points are in the real world, the closer their corresponding vectors will be to each other in the embedding space. This proximity reflects semantic similarity. It allows machines to compare and process data in a way that approximates how humans perceive relationships and meaning.

In a way, an embedding captures the most important aspects of the data while ignoring the less important ones. As a result, tasks like searching for related content or identifying patterns become more efficient and accurate. Embeddings make it possible to quantify how **closely related** different **data points** are, and they **reduce** the **computational complexity** of comparing them.

??? question "Are vectors and embeddings the same thing?"

    When we say “vectors”, we mean a **list of numbers** that **represents the data**.
    When we say “embeddings”, we mean a **list of numbers** that **captures important details and relationships**.

    Although the terms are often used interchangeably, “embeddings” emphasizes that the data is represented with meaning and structure, while “vector” simply refers to the numerical form of that representation.

## Embedding vs Indexing

We have already seen that creating **embeddings** is a way of producing **vectors** in an **n-dimensional embedding space** that capture the meaning and relationships inherent in the data.

Once we have these **vectors**, indexing comes into play. Indexing organizes the vector embeddings so that we can quickly and efficiently locate and retrieve the relevant ones from the entire dataset, without comparing a query against every stored vector.
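To see what an index saves you from, here is a minimal sketch of exhaustive (flat) nearest-neighbor search: the query is compared with every stored vector and the `k` closest are kept. The function name `flat_search` and the toy 2-D vectors are illustrative assumptions. Approximate vector indexes (IVF-PQ, for example) exist to avoid this full scan on large datasets.

```python
import heapq
import math


def l2_distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def flat_search(query, vectors, k=2):
    """Return the ids of the k stored vectors closest to `query`.

    Every stored vector is scanned, so the cost grows linearly with the dataset;
    an approximate index trades a little accuracy for skipping most comparisons.
    """
    scored = ((l2_distance(query, vec), vec_id) for vec_id, vec in vectors.items())
    return [vec_id for _, vec_id in heapq.nsmallest(k, scored)]


# Toy 2-D "embeddings" keyed by id.
vectors = {
    "puppy": [0.9, 0.8],
    "dog": [1.0, 0.9],
    "sea": [-0.7, 0.2],
    "boat": [-0.8, 0.1],
}
print(flat_search([0.92, 0.82], vectors, k=2))  # ['puppy', 'dog']
```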

## What types of data/objects can be embedded?

The following are common types of data that can be embedded:

1. **Text**: sentences, paragraphs, documents, or any other written content.
2. **Images**: photographs, illustrations, or any other visual content.
3. **Audio**: sounds, music, speech, or any other auditory content.
4. **Video**: moving images and sound, which can convey complex information.

Large datasets of multi-modal data (text, audio, images, etc.) can be converted into embeddings with the appropriate model.

!!! tip "LanceDB vs other vector databases"
    Many vector databases focus primarily on storing and retrieving vector embeddings. **LanceDB** instead uses the **Lance file format** (a disk-based architecture), which lets it store and manage **raw file data (bytes)** as well as embeddings. This means users can keep various types of data, including images and text, alongside their vector embeddings in a unified system.

    Because it stores both vectors and the associated file data, LanceDB streamlines querying. Users can run semantic searches that return not only similar embeddings but also the related files and metadata.

## How do embeddings work?

As mentioned, once embeddings are created, each data point is represented as a vector in an n-dimensional space (the embedding space). The dimensionality of this space depends on the complexity of the data and the specific embedding technique used.

Points that are close to each other in the vector space are considered similar (or appear in similar contexts), and points that are far apart are considered dissimilar. To quantify this closeness, we use a distance or similarity metric, for example:

1. **Euclidean distance (L2)**: the straight-line distance between two points (vectors) in a multidimensional space. Smaller values mean more similar vectors.
2. **Cosine similarity**: the cosine of the angle between two vectors, a normalized measure of similarity based only on their direction.
3. **Dot product**: the sum of the products of the corresponding components of two vectors. It takes both the magnitude and the direction of the vectors into account.
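The three metrics can each be written in a few lines of plain Python. This is an illustrative sketch with made-up vectors, not LanceDB's implementation. Scaling `b` changes the L2 distance and the dot product, but leaves the cosine similarity unchanged because only direction matters.

```python
import math


def euclidean_distance(a, b):
    """L2 distance: straight-line distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def dot_product(a, b):
    """Sum of the products of corresponding components."""
    return sum(x * y for x, y in zip(a, b))


def cosine_similarity(a, b):
    """Dot product divided by the product of the vector lengths (range -1 to 1)."""
    norm_a = math.sqrt(dot_product(a, a))
    norm_b = math.sqrt(dot_product(b, b))
    return dot_product(a, b) / (norm_a * norm_b)


a = [1.0, 2.0, 2.0]
b = [2.0, 4.0, 4.0]  # same direction as `a`, twice the length

print(euclidean_distance(a, b))  # 3.0
print(dot_product(a, b))         # 18.0
print(cosine_similarity(a, b))   # 1.0
```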

## How do you create and store vector embeddings for your data?

1. **Creating embeddings**: Choose an embedding model. It can be a pre-trained model (open-source or commercial), or you can train a custom embedding model for your scenario. Then feed your preprocessed data into the chosen model to obtain embeddings.

    ??? question "Popular choices for embedding models"
        For text data, popular choices include OpenAI’s text-embedding models, Google Gemini text-embedding models, Cohere’s Embed models, and SentenceTransformers.

        For image data, popular choices include CLIP (Contrastive Language–Image Pretraining), ImageBind embeddings by Meta (which support audio, video, and images), and Jina multi-modal embeddings.

2. **Storing vector embeddings**: Storing and querying embeddings efficiently calls for **specialized databases** that can handle the complexity of vector data, which traditional databases often struggle with. Vector databases are designed specifically for storing and querying vector embeddings. They optimize for efficient nearest-neighbor search and provide built-in indexing mechanisms.
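The two steps can be sketched end to end without any external service. The `toy_embed` function below is a hypothetical stand-in for a real embedding model. It hashes words into a fixed number of buckets (the "hashing trick") and normalizes the result, which captures word overlap but not meaning. Each stored row keeps the original text next to its vector, which is the kind of record a vector database stores.

```python
import hashlib
import math

DIM = 8  # embedding dimensionality, chosen arbitrarily for the example


def toy_embed(text, dim=DIM):
    """Hypothetical embedding function: hash each word into one of `dim` buckets.

    Real embedding models learn their vectors; this only illustrates the
    text -> fixed-length vector contract.
    """
    vector = [0.0] * dim
    for word in text.lower().split():
        # md5 gives the same bucket on every run (unlike Python's salted hash()).
        bucket = int(hashlib.md5(word.encode("utf-8")).hexdigest(), 16) % dim
        vector[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vector)) or 1.0
    return [v / norm for v in vector]


# "Storing": keep the original data next to its embedding, like a table row.
documents = ["the old man and the sea", "a puppy plays in the park"]
table = [{"text": doc, "vector": toy_embed(doc)} for doc in documents]

for row in table:
    print(len(row["vector"]), row["text"])
```

Swapping `toy_embed` for a real model changes the vectors but not this create-then-store flow.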

!!! tip "Why LanceDB"
    LanceDB **automates** the entire process of creating and storing embeddings for your data. LanceDB allows you to define and use **embedding functions**, which can be **pre-trained models** or **custom models**.

    This lets you **generate** embeddings tailored to the nature of your data (e.g., text, images). It also lets you **store** both the **original data** and the **embeddings** in a **structured schema**, which makes similarity search efficient.

Let's quickly [get started](./index.md) and learn how to manage embeddings in LanceDB.

## Bonus: As a developer, what can you create using embeddings?

As a developer, you can create a variety of innovative applications using vector embeddings. Check out the following:

<div class="grid cards" markdown>

- __Chatbots__

    ---

    Develop chatbots that use embeddings to retrieve relevant context and generate coherent, contextually aware responses to user queries.

    [:octicons-arrow-right-24: Check out examples](../examples/python_examples/chatbot.md)

- __Recommendation Systems__

    ---

    Develop systems that recommend content (such as articles, movies, or products) based on the similarity of keywords and descriptions, enhancing user experience.

    [:octicons-arrow-right-24: Check out examples](../examples/python_examples/recommendersystem.md)

- __Vector Search__

    ---

    Build powerful applications that harness the full potential of semantic search, enabling them to retrieve relevant data quickly and effectively.

    [:octicons-arrow-right-24: Check out examples](../examples/python_examples/vector_search.md)

- __RAG Applications__

    ---

    Combine the strengths of large language models (LLMs) with retrieval-based approaches to create more useful applications.

    [:octicons-arrow-right-24: Check out examples](../examples/python_examples/rag.md)

- __Many more examples__

    ---

    Explore applied examples, available as Colab notebooks or Python scripts, to integrate into your applications.

    [:octicons-arrow-right-24: More](../examples/examples_python.md)

</div>

@@ -2,7 +2,7 @@

LanceDB provides support for full-text search via Lance (before via [Tantivy](https://github.com/quickwit-oss/tantivy) (Python only)), allowing you to incorporate keyword-based search (based on BM25) in your retrieval solutions.

Currently, the Lance full text search is missing some features that are in the Tantivy full text search. This includes phrase queries, re-ranking, and customizing the tokenizer. Thus, in Python, Tantivy is still the default way to do full text search and many of the instructions below apply just to Tantivy-based indices.
Currently, the Lance full text search is missing some features that are in the Tantivy full text search. This includes query parser and customizing the tokenizer. Thus, in Python, Tantivy is still the default way to do full text search and many of the instructions below apply just to Tantivy-based indices.

## Installation (Only for Tantivy-based FTS)
@@ -62,7 +62,7 @@ Consider that we have a LanceDB table named `my_table`, whose string column `tex
    });

    await tbl
      .search("puppy")
      .search("puppy", queryType="fts")
      .select(["text"])
      .limit(10)
      .toArray();
@@ -205,7 +205,7 @@ table.create_fts_index(["text_field"], use_tantivy=True, ordering_field_names=["
## Phrase queries vs. terms queries

!!! warning "Warn"
    Phrase queries are available for only Tantivy-based FTS
    Lance-based FTS doesn't support queries using boolean operators `OR`, `AND`.

For full-text search you can specify either a **phrase** query like `"the old man and the sea"`,
or a **terms** search query like `"(Old AND Man) AND Sea"`. For more details on the terms

`docs/src/integrations/phidata.md` (new file, 383 lines)

@@ -0,0 +1,383 @@
**phidata** is a framework for building **AI Assistants** with long-term memory, contextual knowledge, and the ability to take actions using function calling. It helps turn general-purpose LLMs into specialized assistants tailored to your use case by extending their capabilities with **memory**, **knowledge**, and **tools**.

- **Memory**: Stores chat history in a **database** and enables LLMs to have long-term conversations.
- **Knowledge**: Stores information in a **vector database** and provides LLMs with business context. (Here we will use LanceDB.)
- **Tools**: Enable LLMs to take actions like pulling data from an **API**, **sending emails**, or **querying a database**.

Memory and knowledge make LLMs smarter, while tools make them autonomous.

LanceDB is a vector database, and its integration into phidata makes it easy to provide a **knowledge base** to LLMs. It lets us store information as [embeddings](../embeddings/understanding_embeddings.md) and retrieve the **results** most similar to a given **query**.

??? Question "What is a Knowledge Base?"
    A knowledge base is a database of information that the Assistant can search to improve its responses. This information is stored in a vector database and provides LLMs with business context, which lets them respond in a context-aware manner.

    While any type of storage can act as a knowledge base, vector databases are well suited to quickly retrieving relevant results from dense information.

Let's see how using LanceDB inside phidata helps make LLMs more useful:

## Prerequisites: install and import necessary dependencies

**Create a virtual environment**

1. Install the `virtualenv` package (optional, since the `venv` module used below ships with Python):

    ```shell
    pip install virtualenv
    ```

2. Create a directory for your project, go into it, and create a virtual environment inside it:

    ```shell
    mkdir phi
    cd phi
    python -m venv phidata_
    ```

**Activate the virtual environment**

1. From inside the project directory, run the following command to activate the virtual environment:

    ```shell
    # Windows
    phidata_\Scripts\activate
    # macOS / Linux
    source phidata_/bin/activate
    ```

**Install the following packages in the virtual environment**

```shell
pip install lancedb phidata youtube_transcript_api openai ollama pandas numpy
```

**Create Python files and import the necessary libraries**

You need to create two files: `transcript.py`, and either `ollama_assistant.py` or `openai_assistant.py`.

=== "openai_assistant.py"

    ```python
    import os

    import openai
    from rich.prompt import Prompt
    from phi.assistant import Assistant
    from phi.knowledge.text import TextKnowledgeBase
    from phi.vectordb.lancedb import LanceDb
    from phi.llm.openai import OpenAIChat
    from phi.embedder.openai import OpenAIEmbedder
    from transcript import extract_transcript

    if "OPENAI_API_KEY" not in os.environ:
        # Either export OPENAI_API_KEY, or set the key here as a variable
        openai.api_key = "sk-..."

    # The code below creates a file "transcript.txt" in the directory; the txt file is used below
    youtube_url = "https://www.youtube.com/watch?v=Xs33-Gzl8Mo"
    segment_duration = 20
    transcript_text, dict_transcript = extract_transcript(youtube_url, segment_duration)
    ```

=== "ollama_assistant.py"

    ```python
    from rich.prompt import Prompt
    from phi.assistant import Assistant
    from phi.knowledge.text import TextKnowledgeBase
    from phi.vectordb.lancedb import LanceDb
    from phi.llm.ollama import Ollama
    from phi.embedder.ollama import OllamaEmbedder
    from transcript import extract_transcript

    # The code below creates a file "transcript.txt" in the directory; the txt file is used below
    youtube_url = "https://www.youtube.com/watch?v=Xs33-Gzl8Mo"
    segment_duration = 20
    transcript_text, dict_transcript = extract_transcript(youtube_url, segment_duration)
    ```

=== "transcript.py"

    ```python
    import re

    from youtube_transcript_api import YouTubeTranscriptApi


    def smodify(seconds):
        """Format a number of seconds as HH:MM:SS."""
        hours, remainder = divmod(seconds, 3600)
        minutes, seconds = divmod(remainder, 60)
        return f"{int(hours):02}:{int(minutes):02}:{int(seconds):02}"


    def extract_transcript(youtube_url, segment_duration):
        # Extract the video ID from the URL (youtube.com/watch?v=... or youtu.be/...)
        video_id = re.search(r'(?<=v=)[\w-]+', youtube_url)
        if not video_id:
            video_id = re.search(r'(?<=be/)[\w-]+', youtube_url)
            if not video_id:
                return None

        video_id = video_id.group(0)

        # Attempt to fetch the transcript
        try:
            # Try to get the official English transcript
            transcript = YouTubeTranscriptApi.get_transcript(video_id, languages=['en'])
        except Exception:
            # Otherwise, translate the first available transcript (e.g. auto-generated) to English
            try:
                transcript_list = YouTubeTranscriptApi.list_transcripts(video_id)
                first_available = next(iter(transcript_list))
                transcript = first_available.translate('en').fetch()
            except Exception:
                return None

        # Group the transcript into chunks of roughly `segment_duration` seconds
        transcript_text, dict_transcript = format_transcript(transcript, segment_duration)
        # Open the file in write mode, which creates it if it doesn't exist
        with open("transcript.txt", "w", encoding="utf-8") as file:
            file.write(transcript_text)
        return transcript_text, dict_transcript


    def format_transcript(transcript, segment_duration):
        chunked_transcript = []
        chunk_dict = []
        current_chunk = []
        current_time = 0
        start_time_chunk = 0  # Start time of the current chunk
        end_time_x = 0  # End time of the most recent segment

        for segment in transcript:
            start_time = segment['start']
            end_time_x = start_time + segment['duration']
            text = segment['text']

            # Add text to the current chunk
            current_chunk.append(text)

            # Time elapsed since the current chunk started
            current_time = start_time - start_time_chunk

            # If the chunk spans at least `segment_duration` seconds, save it
            if current_time >= segment_duration:
                timestamp = f"[{smodify(start_time_chunk)} to {smodify(end_time_x)}]"
                chunked_transcript.append(f"{timestamp} " + " ".join(current_chunk))
                # Drop non-breaking spaces and turn newlines into spaces
                chunk_text = re.sub(r'[\xa0\n]', lambda x: '' if x.group() == '\xa0' else ' ', "\n".join(current_chunk))
                chunk_dict.append({"timestamp": timestamp, "text": chunk_text})
                current_chunk = []  # Reset the chunk
                start_time_chunk = end_time_x  # The next chunk starts where this segment ends
                current_time = 0  # Reset current time

        # Add any remaining text in the last chunk
        if current_chunk:
            timestamp = f"[{smodify(start_time_chunk)} to {smodify(end_time_x)}]"
            chunked_transcript.append(f"{timestamp} " + " ".join(current_chunk))
            chunk_text = re.sub(r'[\xa0\n]', lambda x: '' if x.group() == '\xa0' else ' ', "\n".join(current_chunk))
            chunk_dict.append({"timestamp": timestamp, "text": chunk_text})

        return "\n\n".join(chunked_transcript), chunk_dict
    ```

!!! warning
    If you are creating the Ollama assistant, download and install Ollama [from here](https://ollama.com/) and run the Ollama instance in the background. Also download the required models using `ollama pull <model-name>` (for example, `ollama pull nomic-embed-text` for the embedder used below). Check out the available models [here](https://ollama.com/library).

**Run the following command to deactivate the virtual environment if needed**

```shell
deactivate
```

## **Step 1** - Create a Knowledge Base for AI Assistant using LanceDB

=== "openai_assistant.py"

    ```python
    # Create a knowledge base with OpenAIEmbedder in LanceDB
    knowledge_base = TextKnowledgeBase(
        path="transcript.txt",
        vector_db=LanceDb(
            embedder=OpenAIEmbedder(api_key=openai.api_key),
            table_name="transcript_documents",
            uri="./t3mp/.lancedb",
        ),
        num_documents=10,
    )
    ```

=== "ollama_assistant.py"

    ```python
    # Create a knowledge base with OllamaEmbedder in LanceDB
    knowledge_base = TextKnowledgeBase(
        path="transcript.txt",
        vector_db=LanceDb(
            embedder=OllamaEmbedder(model="nomic-embed-text", dimensions=768),
            table_name="transcript_documents",
            uri="./t2mp/.lancedb",
        ),
        num_documents=10,
    )
    ```

Check out the list of **embedders** supported by **phidata** and their usage [here](https://docs.phidata.com/embedder/introduction).

Here we have used `TextKnowledgeBase`, which loads text files (`.txt` by default) into the knowledge base.

Let's see all the parameters that `TextKnowledgeBase` takes:

| Name | Type | Purpose | Default |
|:-----|:-----|:--------|:--------|
| `path` | `Union[str, Path]` | Path to text file(s). It can point to a single text file or a directory of text files. | provided by user |
| `formats` | `List[str]` | File formats accepted by this knowledge base. | `[".txt"]` |
| `vector_db` | `VectorDb` | Vector database for the knowledge base. phidata provides wrappers around many vector DBs; import the LanceDB one with `from phi.vectordb.lancedb import LanceDb`. | provided by user |
| `num_documents` | `int` | Number of results (documents/vectors) that the vector search should return. | `5` |
| `reader` | `TextReader` | phidata provides many types of reader objects. A reader reads the data, cleans it, splits it into chunks, wraps each chunk in a `Document` object, and returns a **`List[Document]`**. | `TextReader()` |
| `optimize_on` | `int` | Number of documents at which the vector database is optimized; this is meant to trigger index creation. | `1000` |

|

||||

|

||||

??? Tip "Wondering what the `Document` class is?"
    Before data is stored in a vector DB, it is split into smaller chunks. Embeddings are created for these chunks, and the embeddings are stored in the vector DB together with the chunks. When the user queries the vector DB, the query is converted into an embedding, and a nearest-neighbor search returns the stored embeddings (and therefore the chunks of our data) that are most semantically similar to the query.

    In phidata, `Document` is a class. When you let phidata create and manage embeddings, it splits the data into smaller chunks as expected, but it does not embed the raw chunks directly. Instead, it wraps each chunk in a `Document` object along with metadata about that chunk. Embeddings are then created from these `Document` objects and stored in the vector DB.

    ```python
    from typing import Any, Dict, List, Optional

    from pydantic import BaseModel

    from phi.embedder import Embedder


    class Document(BaseModel):
        """Model for managing a document"""

        content: str  # <--- the chunk's data is stored here
        id: Optional[str] = None
        name: Optional[str] = None
        meta_data: Dict[str, Any] = {}
        embedder: Optional[Embedder] = None
        embedding: Optional[List[float]] = None
        usage: Optional[Dict[str, Any]] = None
    ```
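To make the retrieval step described in the tip concrete, here is a minimal, library-free sketch of brute-force nearest-neighbor search with cosine distance. The three-dimensional "embeddings" are made-up toy values standing in for real embedder output, and `nearest_chunks` is a hypothetical helper, not a phidata or LanceDB API; in practice LanceDB runs this search for you.

```python
import math
from typing import List, Tuple


def cosine_distance(a: List[float], b: List[float]) -> float:
    """1 - cosine similarity: 0 for identical directions, larger means less similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def nearest_chunks(query_vec: List[float],
                   stored: List[Tuple[str, List[float]]],
                   k: int = 2) -> List[str]:
    """Brute-force k-nearest-neighbor search over (chunk_text, embedding) pairs."""
    ranked = sorted(stored, key=lambda item: cosine_distance(query_vec, item[1]))
    return [text for text, _ in ranked[:k]]


# Toy 3-d "embeddings" with made-up values; real embedders output hundreds of dimensions.
store = [
    ("intro to vector databases", [0.9, 0.1, 0.0]),
    ("cooking pasta at home", [0.0, 0.2, 0.9]),
    ("how ANN indexes speed up search", [0.8, 0.3, 0.1]),
]
query = [1.0, 0.2, 0.0]  # stands in for the embedded user question
print(nearest_chunks(query, store, k=2))
# ['intro to vector databases', 'how ANN indexes speed up search']
```

A brute-force scan like this compares the query against every stored vector; a vector index trades a little recall for much less work.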

Beyond text, phidata can load many other types of data into a knowledge base. Check out [phidata Knowledge Base](https://docs.phidata.com/knowledge/introduction) for more information.

Let's dig deeper into the `vector_db` parameter and see what parameters `LanceDb` takes:

| Name | Type | Purpose | Default |
|:-----|:-----|:--------|:--------|
|`embedder`|`Embedder`| phidata provides many embedders that wrap embedding APIs and use them to generate embeddings. Check out other embedders [here](https://docs.phidata.com/embedder/introduction). | `OpenAIEmbedder` |
|`distance`|`Distance`| The distance metric used to compute the similarity between vectors; it directly affects search results and performance. |`Distance.cosine`|
|`connection`|`lancedb.db.LanceTable`| The LanceTable, accessible through `.connection`. You can pass an existing LanceDB table created outside of phidata and use it. If not provided, a new table is created using the `table_name` parameter and assigned to `connection`. |`None`|
|`uri`|`str`| Directory location of the **LanceDB database**; used to establish a connection for interacting with the database. | `"/tmp/lancedb"` |
|`table_name`|`str`| If `connection` is not provided, a new **LanceDB table** with this name (or the default name) is initialized and connected in the database at `uri`. |`"phi"`|
|`nprobes`|`int`| The number of partitions the search algorithm examines to find the nearest neighbors of a query vector. Higher values give better recall (vectors that exist are more likely to be found) at the cost of latency. |`20`|
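The `nprobes` trade-off is easiest to see in a toy IVF (inverted file) index: vectors are bucketed by their nearest centroid, and a query scans only the `nprobes` partitions whose centroids are closest. The sketch below is a simplified, pure-Python illustration with hand-picked centroids and squared L2 distance; it is not LanceDB's implementation, which learns centroids from the data and typically also compresses vectors.

```python
from typing import Dict, List, Tuple

Vec = Tuple[float, float]


def sq_l2(a: Vec, b: Vec) -> float:
    """Squared Euclidean distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def build_ivf(vectors: List[Vec], centroids: List[Vec]) -> Dict[int, List[Vec]]:
    """Assign every vector to the partition of its closest centroid."""
    partitions: Dict[int, List[Vec]] = {i: [] for i in range(len(centroids))}
    for v in vectors:
        nearest = min(range(len(centroids)), key=lambda i: sq_l2(v, centroids[i]))
        partitions[nearest].append(v)
    return partitions


def ivf_search(query: Vec, centroids: List[Vec], partitions: Dict[int, List[Vec]],
               nprobes: int, k: int = 1) -> List[Vec]:
    """Scan only the `nprobes` partitions whose centroids are closest to the query."""
    probe_order = sorted(range(len(centroids)), key=lambda i: sq_l2(query, centroids[i]))
    candidates = [v for i in probe_order[:nprobes] for v in partitions[i]]
    return sorted(candidates, key=lambda v: sq_l2(query, v))[:k]


centroids = [(0.0, 0.0), (10.0, 10.0)]
vectors = [(1.0, 1.0), (4.9, 4.9), (9.0, 9.0), (5.3, 5.3)]
partitions = build_ivf(vectors, centroids)
query = (5.05, 5.05)

print(ivf_search(query, centroids, partitions, nprobes=1))  # [(5.3, 5.3)]: true neighbor not scanned
print(ivf_search(query, centroids, partitions, nprobes=2))  # [(4.9, 4.9)]: exact result
```

With `nprobes=1` the query lands in the second partition and misses `(4.9, 4.9)`, which sits just across the partition boundary; probing both partitions recovers it at the cost of scanning more vectors.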

!!! note
    Since we have only initialized the knowledge base, the LanceDB table that backs it is not yet populated with our data. It will be populated in **Step 3**, when we perform the `load` operation.

    You can check the state of the LanceDB table using `knowledge_base.vector_db.connection.to_pandas()`.

Now that the knowledge base is initialized, we can go to **Step 2**.

## **Step 2** - Create an assistant with our choice of LLM and a reference to the knowledge base

=== "openai_assistant.py"

    ```python
    # Define an assistant with the gpt-4o-mini LLM and a reference to the knowledge base created above
    assistant = Assistant(
        llm=OpenAIChat(model="gpt-4o-mini", max_tokens=1000, temperature=0.3, api_key=openai.api_key),
        description="""You are an expert in explaining YouTube video transcripts. You are a bot that takes the transcript of a video and answers questions based on it.

        This is the transcript for the above timestamp: {relevant_document}
        The user input is: {user_input}
        Generate highlights only when asked.
        When asked to generate highlights from the video, understand the context for each timestamp and create key highlight points, answering in the following way:
        [timestamp] - highlight 1
        [timestamp] - highlight 2
        ... and so on

        Your task is to understand the user question and provide an answer using the provided contexts. Your answers are correct, high-quality, and written by a domain expert. If the provided context does not contain the answer, simply state, 'The provided context does not have the answer.'""",
        knowledge_base=knowledge_base,
        add_references_to_prompt=True,
    )
    ```

=== "ollama_assistant.py"

    ```python
    # Define an assistant with the llama3.1 LLM and a reference to the knowledge base created above
    assistant = Assistant(
        llm=Ollama(model="llama3.1"),
        description="""You are an expert in explaining YouTube video transcripts. You are a bot that takes the transcript of a video and answers questions based on it.

        This is the transcript for the above timestamp: {relevant_document}
        The user input is: {user_input}
        Generate highlights only when asked.
        When asked to generate highlights from the video, understand the context for each timestamp and create key highlight points, answering in the following way:
        [timestamp] - highlight 1
        [timestamp] - highlight 2
        ... and so on

        Your task is to understand the user question and provide an answer using the provided contexts. Your answers are correct, high-quality, and written by a domain expert. If the provided context does not contain the answer, simply state, 'The provided context does not have the answer.'""",
        knowledge_base=knowledge_base,
        add_references_to_prompt=True,
    )
    ```

Assistants add **memory**, **knowledge**, and **tools** to LLMs. In this example we add only **knowledge**.

Whenever we send a query to the LLM, the assistant retrieves relevant information from our **Knowledge Base** (a table in LanceDB) and passes it to the LLM along with the user query in a structured way.

- `add_references_to_prompt=True` always adds information from the knowledge base to the prompt, regardless of whether it is relevant to the question.

To learn more about creating an assistant in phidata, check out the [phidata docs](https://docs.phidata.com/assistants/introduction).
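Conceptually, `add_references_to_prompt=True` means the retrieved chunks are pasted into the prompt ahead of the user's question. The sketch below shows only that idea, using a hypothetical `build_prompt` helper and a stubbed retriever; the actual prompt template is assembled internally by phidata's `Assistant` and looks different.

```python
from typing import Callable, List


def build_prompt(question: str, retrieve: Callable[[str], List[str]],
                 num_documents: int = 3) -> str:
    """Paste the top retrieved chunks above the user's question (hypothetical format)."""
    references = retrieve(question)[:num_documents]
    reference_block = "\n".join(f"- {ref}" for ref in references)
    return (
        "Use the following references from the knowledge base:\n"
        f"{reference_block}\n\n"
        f"Question: {question}"
    )


def fake_retriever(query: str) -> List[str]:
    # Stand-in for a LanceDB vector search: it ignores the query and returns fixed chunks,
    # mirroring how references are added whether or not they are relevant.
    return [
        "Chunk about vector databases.",
        "Chunk about the Lance file format.",
    ]


print(build_prompt("What is the video about?", fake_retriever))
```

Because references are always inserted, irrelevant chunks still consume prompt tokens, which is why the assistant's description tells the model to say when the context does not contain the answer.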

## **Step 3** - Load data to the Knowledge Base

```python
# Load our data into the knowledge_base (populating the LanceTable)
assistant.knowledge_base.load(recreate=False)
```

The code above loads the data into the knowledge base (LanceDB table), after which it is ready to be used by the assistant. `load` accepts the following parameters:

| Name | Type | Purpose | Default |
|:-----|:-----|:--------|:--------|
|`recreate`|`bool`| If True, drops the existing table and recreates it in the vector DB. |`False`|
|`upsert`|`bool`| If True and the vector DB supports upsert, documents are upserted into the vector DB. | `False` |
|`skip_existing`|`bool`| If True, skips documents that already exist in the vector DB when inserting. |`True`|

??? tip "What is upsert?"
    Upsert is a database operation that combines "update" and "insert". It updates an existing record if one with the same identifier exists, or inserts a new record if no matching record exists. This keeps the stored data current without having to check for existence manually.
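A minimal in-memory model of how the three `load` flags interact, assuming rows are matched by a document `id`. `ToyTable` and its methods are hypothetical and only illustrate the semantics described in the table and the upsert tip; phidata delegates the real work to LanceDB.

```python
from typing import Dict, List


class ToyTable:
    """List-backed stand-in for a vector table whose rows carry a document `id`."""

    def __init__(self) -> None:
        self.rows: List[Dict[str, str]] = []

    def _position(self, doc_id: str) -> int:
        """Index of the first row with this id, or -1 if absent."""
        for i, row in enumerate(self.rows):
            if row["id"] == doc_id:
                return i
        return -1

    def load(self, docs: List[Dict[str, str]], recreate: bool = False,
             upsert: bool = False, skip_existing: bool = True) -> None:
        if recreate:
            self.rows = []  # drop the table and start from scratch
        for doc in docs:
            pos = self._position(doc["id"])
            if upsert and pos >= 0:
                self.rows[pos] = dict(doc)  # update: a row with this id already exists
            elif pos >= 0 and skip_existing:
                continue  # keep the stored version untouched
            else:
                self.rows.append(dict(doc))  # insert (can duplicate an id when not skipping)


table = ToyTable()
table.load([{"id": "a", "content": "v1"}])
table.load([{"id": "a", "content": "v2"}, {"id": "b", "content": "new"}])
print(table.rows)  # 'a' keeps v1 because skip_existing=True; 'b' is inserted
table.load([{"id": "a", "content": "v3"}], upsert=True)
print(table.rows)  # 'a' is updated to v3 in place
```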

During the load operation, phidata interacts directly with the LanceDB library and populates the table with our data in the following steps:

1. It **creates** and **initializes** the table if it does not exist.

2. It **splits** our data into smaller **chunks**.

    ??? question "How do they create chunks?"
        **phidata** provides many types of **Knowledge Bases** based on the type of data. Most of them :material-information-outline:{ title="except LlamaIndexKnowledgeBase and LangChainKnowledgeBase"} have a property method called `document_lists` of type `Iterator[List[Document]]`, which is invoked during the load operation. It traverses the data we provided (in this case, text files) using `reader`, which **reads** the data, **creates chunks**, and **encapsulates** each chunk in a `Document` object. The property then yields **lists of `Document` objects** containing our data.

3. **Embeddings** are then created for these chunks, and the chunks are **inserted** into the LanceDB table.

    ??? question "How do they insert your data as different rows in the LanceDB table?"
        The chunks of your data are in the form of **lists of `Document` objects**, yielded in the step above.

        For each `Document` in `List[Document]`, it performs the following operations:

        - Creates an embedding for the `Document`.
        - Cleans the **content attribute** (which holds the chunk of our data) of the `Document`.
        - Prepares the data by creating an `id` and loading a `payload` with the metadata related to this chunk. (1)
        - Adds this data to the LanceTable.
        { .annotate }

        1. Three columns will be added to the table: `"id"`, `"vector"`, and `"payload"` (the payload contains various metadata, including **`content`**).

4. The internal state of `knowledge_base` has now changed (embeddings are created and loaded into the table), and it is **ready to be used by the assistant**.
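The load steps above can be sketched end to end without any external services. Everything here is a simplified assumption: `ToyDocument` mirrors only a few fields of phidata's `Document`, chunking is a naive fixed-size word split rather than `TextReader`'s logic, `toy_embed` is a fake vowel-count embedder, and using an MD5 hash of the cleaned content as the row `id` is an illustrative choice, not a documented phidata guarantee.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Any, Dict, Iterator, List, Optional


@dataclass
class ToyDocument:
    """A trimmed-down stand-in for phidata's `Document` (only a few fields)."""
    content: str
    name: Optional[str] = None
    meta_data: Dict[str, Any] = field(default_factory=dict)
    embedding: Optional[List[float]] = None


def document_lists(text: str, name: str, chunk_words: int = 5) -> Iterator[List[ToyDocument]]:
    """Step 2: split the text into fixed-size word chunks and yield them as one list."""
    words = text.split()
    yield [
        ToyDocument(content=" ".join(words[start:start + chunk_words]),
                    name=name, meta_data={"chunk": n})
        for n, start in enumerate(range(0, len(words), chunk_words))
    ]


def toy_embed(text: str) -> List[float]:
    """Fake embedder: vowel counts. A real embedder calls an embedding model."""
    return [float(text.count(ch)) for ch in "aeiou"]


def to_rows(docs: List[ToyDocument]) -> List[Dict[str, Any]]:
    """Step 3: embed, clean, and package each document as an id / vector / payload row."""
    rows = []
    for doc in docs:
        doc.embedding = toy_embed(doc.content)
        cleaned = doc.content.replace("\x00", "")  # assumed cleaning step
        payload = {"name": doc.name, "meta_data": doc.meta_data, "content": cleaned}
        rows.append({
            "id": hashlib.md5(cleaned.encode()).hexdigest(),  # assumed id scheme
            "vector": doc.embedding,
            "payload": json.dumps(payload),
        })
    return rows


text = "LanceDB is an open source vector database built on the Lance columnar format"
rows = [row for batch in document_lists(text, name="transcript") for row in to_rows(batch)]
print(len(rows), json.loads(rows[0]["payload"])["content"])  # 3 LanceDB is an open source
```

Each resulting dict matches the three-column layout described above (`id`, `vector`, `payload`) and could be passed to a LanceDB table's `add` method.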

## **Step 4** - Start a CLI chatbot with access to the Knowledge Base

```python
from rich.prompt import Prompt

# Start a CLI chatbot with access to the knowledge base
assistant.print_response("Ask me about something from the knowledge base")
while True:
    message = Prompt.ask("[bold] :sunglasses: User [/bold]")
    if message in ("exit", "bye"):
        break
    assistant.print_response(message, markdown=True)
```

For more information and amazing phidata cookbooks, read the [phidata documentation](https://docs.phidata.com/introduction) and visit the [LanceDB x phidata documentation](https://docs.phidata.com/vectordb/lancedb).

@@ -68,3 +68,25 @@ currently is also a memory intensive operation.

#### Returns

[`Index`](Index.md)

### fts()

> `static` **fts**(`options`?): [`Index`](Index.md)

Create a full text search index

This index is used to search text data. It is created by tokenizing the text into words and then storing the occurrences of these words in a data structure called an inverted index, which allows for fast search.

During a search, the query is tokenized and the inverted index is used to find the rows that contain the query words. The rows are then scored with BM25, and the top-scoring rows are sorted and returned.
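To illustrate the two phases described above (building an inverted index, then BM25 scoring), here is a small sketch, written in Python like the other examples on these pages. It assumes lowercase whitespace tokenization, the common BM25 parameters `k1 = 1.2` and `b = 0.75`, and the Lucene-style IDF `log(1 + (N - df + 0.5) / (df + 0.5))`; LanceDB's tokenizer, defaults, and index layout differ, so treat it as a model of the idea rather than of `fts()` internals.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

Index = Dict[str, Dict[int, int]]  # term -> {row id: term frequency}


def tokenize(text: str) -> List[str]:
    return text.lower().split()


def build_index(docs: List[str]) -> Tuple[Index, List[int]]:
    """Build the inverted index plus each row's length in tokens."""
    index: Index = defaultdict(dict)
    lengths: List[int] = []
    for row_id, doc in enumerate(docs):
        tokens = tokenize(doc)
        lengths.append(len(tokens))
        for term, tf in Counter(tokens).items():
            index[term][row_id] = tf
    return index, lengths


def bm25_search(query: str, index: Index, lengths: List[int], k: int = 3,
                k1: float = 1.2, b: float = 0.75) -> List[Tuple[int, float]]:
    """Score only rows that contain a query term, then return the top k."""
    n_rows = len(lengths)
    avg_len = sum(lengths) / n_rows
    scores: Dict[int, float] = defaultdict(float)
    for term in tokenize(query):
        postings = index.get(term, {})  # rows without the term are never touched
        if not postings:
            continue
        df = len(postings)
        idf = math.log(1 + (n_rows - df + 0.5) / (df + 0.5))
        for row_id, tf in postings.items():
            denom = tf + k1 * (1 - b + b * lengths[row_id] / avg_len)
            scores[row_id] += idf * tf * (k1 + 1) / denom
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)[:k]


docs = [
    "lancedb supports full text search",
    "vector search finds the nearest neighbors",
    "full text search uses an inverted index",
]
index, lengths = build_index(docs)
print([row for row, _ in bm25_search("inverted index search", index, lengths)])  # [2, 0, 1]
```

Row 2 wins because it contains the rare terms `inverted` and `index`; rows 0 and 1 match only the common term `search`, and the shorter row 0 edges out row 1 through BM25's length normalization.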

#### Parameters

• **options?**: `Partial`<[`FtsOptions`](../interfaces/FtsOptions.md)>

#### Returns

[`Index`](Index.md)

@@ -501,16 +501,28 @@ Get the schema of the table.

#### search(query)

> `abstract` **search**(`query`): [`VectorQuery`](VectorQuery.md)

> `abstract` **search**(`query`, `queryType`, `ftsColumns`): [`VectorQuery`](VectorQuery.md)

Create a search query to find the nearest neighbors of the given query vector, or the documents with the highest relevance to the query string.

##### Parameters

• **query**: `string`

the query. This will be converted to a vector using the table's provided embedding function, or used as the query string for full-text search if `queryType` is "fts".

• **queryType**: `string` = `"auto"` \| `"fts"`

the type of query to run. If "auto", the query type will be determined based on the query.

• **ftsColumns**: `string[] | string` = undefined

the columns to search in. If not provided, all indexed columns will be searched.

Currently, only a single column can be searched.

##### Returns

@@ -37,6 +37,7 @@

- [IndexOptions](interfaces/IndexOptions.md)
- [IndexStatistics](interfaces/IndexStatistics.md)
- [IvfPqOptions](interfaces/IvfPqOptions.md)
- [FtsOptions](interfaces/FtsOptions.md)
- [TableNamesOptions](interfaces/TableNamesOptions.md)
- [UpdateOptions](interfaces/UpdateOptions.md)
- [WriteOptions](interfaces/WriteOptions.md)

51

docs/src/rag/adaptive_rag.md

Normal file

@@ -0,0 +1,51 @@

**Adaptive RAG 🤹♂️**
====================================================================

Adaptive RAG is a RAG technique that combines query analysis with self-corrective RAG.

For query analysis, it uses a small classifier (a language model) to predict the query’s complexity. Based on that prediction, the query is routed to one of three retrieval strategies: no retrieval, single-shot RAG, or iterative RAG.

**[Official Paper](https://arxiv.org/pdf/2403.14403)**

<figure markdown="span">

<figcaption>Adaptive-RAG: <a href="https://github.com/starsuzi/Adaptive-RAG">Source</a>
</figcaption>
</figure>

**[Official Implementation](https://github.com/starsuzi/Adaptive-RAG)**
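The three-way routing described above can be sketched as a dispatcher over a complexity label. In the paper the label comes from a trained classifier; the keyword heuristic in `classify_complexity` below is only a hypothetical stand-in so the example runs without a model, and `fake_retrieve` / `fake_generate` are placeholders for a real retriever and LLM.

```python
from typing import Callable, List


def classify_complexity(query: str) -> str:
    """Hypothetical keyword heuristic standing in for the trained classifier."""
    q = query.lower()
    if " and " in q or "compare" in q:
        return "complex"    # likely needs multi-step reasoning
    if q.startswith(("what is", "who is", "when")):
        return "simple"     # the LLM may answer from its own knowledge
    return "moderate"


def adaptive_answer(query: str,
                    retrieve: Callable[[str], List[str]],
                    generate: Callable[[str, List[str]], str],
                    max_steps: int = 3) -> str:
    label = classify_complexity(query)
    if label == "simple":
        return generate(query, [])               # no retrieval
    if label == "moderate":
        return generate(query, retrieve(query))  # single-shot RAG
    context: List[str] = []                      # iterative RAG
    answer = ""
    for _ in range(max_steps):
        context += retrieve(answer or query)     # re-query with the latest draft answer
        answer = generate(query, context)
    return answer


def fake_retrieve(q: str) -> List[str]:
    return [f"passage for: {q}"]


def fake_generate(q: str, ctx: List[str]) -> str:
    return f"answer using {len(ctx)} passages"


print(adaptive_answer("Compare HyDE and FLARE", fake_retrieve, fake_generate))  # answer using 3 passages
```

The point of the routing is cost: simple questions skip retrieval entirely, and only queries judged complex pay for several retrieve-and-generate rounds.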

Here’s a code snippet for query analysis:

```python
from typing import Literal

from langchain_core.prompts import ChatPromptTemplate
from langchain_core.pydantic_v1 import BaseModel, Field
from langchain_openai import ChatOpenAI


class RouteQuery(BaseModel):
    """Route a user query to the most relevant datasource."""

    datasource: Literal["vectorstore", "web_search"] = Field(
        ...,
        description="Given a user question choose to route it to web search or a vectorstore.",
    )


# LLM with function call
llm = ChatOpenAI(model="gpt-3.5-turbo-0125", temperature=0)
structured_llm_router = llm.with_structured_output(RouteQuery)
```

For defining and querying the retriever:

```python
from langchain_community.vectorstores import LanceDB
from langchain_openai import OpenAIEmbeddings

# add documents in LanceDB
# (`doc_splits` is assumed to be a list of split documents prepared earlier)
vectorstore = LanceDB.from_documents(
    documents=doc_splits,
    embedding=OpenAIEmbeddings(),
)
retriever = vectorstore.as_retriever()

# query using the defined retriever
question = "How adaptive RAG works"
docs = retriever.get_relevant_documents(question)
```

38

docs/src/rag/advanced_techniques/flare.md

Normal file

@@ -0,0 +1,38 @@

**FLARE 💥**
====================================================================

FLARE, which stands for Forward-Looking Active REtrieval augmented generation, is a generic retrieval-augmented generation method that actively decides when and what to retrieve. It predicts the upcoming sentence to anticipate future content and, if that prediction contains low-confidence tokens, uses it as the query to retrieve relevant documents.

**[Official Paper](https://arxiv.org/abs/2305.06983)**

<figure markdown="span">

<figcaption>FLARE: <a href="https://github.com/jzbjyb/FLARE">Source</a></figcaption>
</figure>

[Open In Colab](https://colab.research.google.com/github/lancedb/vectordb-recipes/blob/main/examples/better-rag-FLAIR/main.ipynb)
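The core FLARE decision fits in a few lines: draft the next sentence, and only if some token's probability falls below a threshold, retrieve using that draft (with the low-confidence tokens masked out) as the query, then regenerate. The token probabilities below are made-up numbers, and `flare_step` with its callables is a hypothetical sketch; LangChain's `FlareChain` exposes the threshold as `min_prob`.

```python
from typing import Callable, List, Tuple

Token = Tuple[str, float]  # (token text, model probability)


def masked_query(draft: List[Token], min_prob: float) -> str:
    """Use the draft sentence as the query, dropping low-confidence tokens."""
    return " ".join(tok for tok, p in draft if p >= min_prob)


def flare_step(draft: List[Token], min_prob: float,
               retrieve: Callable[[str], List[str]],
               regenerate: Callable[[List[str]], str]) -> str:
    """Keep a confident draft; otherwise retrieve with it and regenerate the sentence."""
    if all(p >= min_prob for _, p in draft):
        return " ".join(tok for tok, _ in draft)
    return regenerate(retrieve(masked_query(draft, min_prob)))


# Hypothetical draft of the next sentence with made-up token probabilities.
draft = [("The", 0.97), ("index", 0.90), ("uses", 0.85), ("256", 0.30), ("partitions", 0.80)]
print(masked_query(draft, min_prob=0.45))  # The index uses partitions
```

Masking the uncertain token keeps a possibly wrong guess (here, the number) out of the retrieval query, so the search is driven by the parts of the sentence the model is confident about.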

Here’s a code snippet for using FLARE with LangChain:

```python
from langchain.chains import FlareChain, LLMChain
from langchain.document_loaders import ArxivLoader
from langchain.embeddings import OpenAIEmbeddings
from langchain.llms import OpenAI
from langchain.prompts import PromptTemplate
from langchain.vectorstores import LanceDB

llm = OpenAI()
embeddings = OpenAIEmbeddings()

# load dataset
# (`doc_chunks`, `table`, and `input_text` are assumed to be prepared in this step)

# LanceDB retriever
vector_store = LanceDB.from_documents(doc_chunks, embeddings, connection=table)
retriever = vector_store.as_retriever()

# define flare chain
flare = FlareChain.from_llm(llm=llm, retriever=retriever, max_generation_len=300, min_prob=0.45)

result = flare.run(input_text)
```

[Open In Colab](https://colab.research.google.com/github/lancedb/vectordb-recipes/blob/main/examples/better-rag-FLAIR/main.ipynb)

55

docs/src/rag/advanced_techniques/hyde.md

Normal file

@@ -0,0 +1,55 @@

**HyDE: Hypothetical Document Embeddings 🤹♂️**
====================================================================

HyDE, which stands for Hypothetical Document Embeddings, is an approach for precise zero-shot dense retrieval without relevance labels. It focuses on augmenting and improving similarity search, which is often intertwined with vector stores in information retrieval. The method generates a hypothetical document for an incoming query; that document is then embedded and used to look up real documents that are similar to it.

**[Official Paper](https://arxiv.org/pdf/2212.10496)**

<figure markdown="span">

<figcaption>HyDE: <a href="https://arxiv.org/pdf/2212.10496">Source</a></figcaption>
</figure>

[Open In Colab](https://colab.research.google.com/github/lancedb/vectordb-recipes/blob/main/examples/Advance-RAG-with-HyDE/main.ipynb)
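Stripped of LangChain, HyDE comes down to three steps: ask an LLM for a hypothetical answer, embed that answer instead of the raw question, and search with it. The sketch below assumes a stubbed `fake_llm` and a toy bag-of-words embedding so it runs offline; both are hypothetical placeholders for a real LLM and embedding model.

```python
import math
from collections import Counter
from typing import Callable, Dict, List


def embed(text: str) -> Dict[str, float]:
    """Toy bag-of-words 'embedding' (term counts)."""
    return dict(Counter(text.lower().split()))


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    dot = sum(v * b.get(t, 0.0) for t, v in a.items())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def hyde_search(question: str, corpus: List[str], llm: Callable[[str], str], k: int = 1) -> List[str]:
    hypothetical_doc = llm(f"Write a passage that answers: {question}")
    query_vec = embed(hypothetical_doc)  # embed the fake answer, not the question
    ranked = sorted(corpus, key=lambda doc: cosine(query_vec, embed(doc)), reverse=True)
    return ranked[:k]


def fake_llm(prompt: str) -> str:
    # Hypothetical LLM output; a real model would write this from the prompt.
    return "lancedb stores vectors on disk in the lance columnar format"


corpus = [
    "the lance columnar format stores vectors on disk efficiently",
    "pasta should be cooked in salted boiling water",
]
print(hyde_search("Where does LanceDB keep its data?", corpus, fake_llm))
# ['the lance columnar format stores vectors on disk efficiently']
```

Here the raw question shares no words with the relevant passage, so embedding it directly would give no signal; the hypothetical answer bridges that vocabulary gap, which is the motivation behind HyDE.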

Here’s a code snippet for using HyDE with LangChain:

```python
from langchain.chains import HypotheticalDocumentEmbedder, LLMChain
from langchain.embeddings import OpenAIEmbeddings
from langchain.llms import OpenAI
from langchain.prompts import PromptTemplate
from langchain.vectorstores import LanceDB

# set OPENAI_API_KEY as an env variable before this step
# initialize LLM and embedding function
llm = OpenAI()
base_embeddings = OpenAIEmbeddings()

# prompt template
prompt_template = """
As a knowledgeable and helpful research assistant, your task is to provide informative answers based on the given context. Use your extensive knowledge base to offer clear, concise, and accurate responses to the user's inquiries.
If the question is not related to the documents, simply say you don't know.
Question: {question}

Answer:
"""
prompt = PromptTemplate(input_variables=["question"], template=prompt_template)

# LLM Chain (defined before HyDE, which uses it to write the hypothetical document)
llm_chain = LLMChain(llm=llm, prompt=prompt)

# HyDE embedding
embeddings = HypotheticalDocumentEmbedder(llm_chain=llm_chain, base_embeddings=base_embeddings)

# load dataset
# (`documents`, `table`, and `query` are assumed to be prepared in this step)

# LanceDB vector store
vector_store = LanceDB.from_documents(documents, embeddings, connection=table)

# vector search
docs = vector_store.similarity_search(query)
llm_chain.run(query)
```

[Open In Colab](https://colab.research.google.com/github/lancedb/vectordb-recipes/blob/main/examples/Advance-RAG-with-HyDE/main.ipynb)

101

docs/src/rag/agentic_rag.md

Normal file

@@ -0,0 +1,101 @@

**Agentic RAG 🤖**
====================================================================

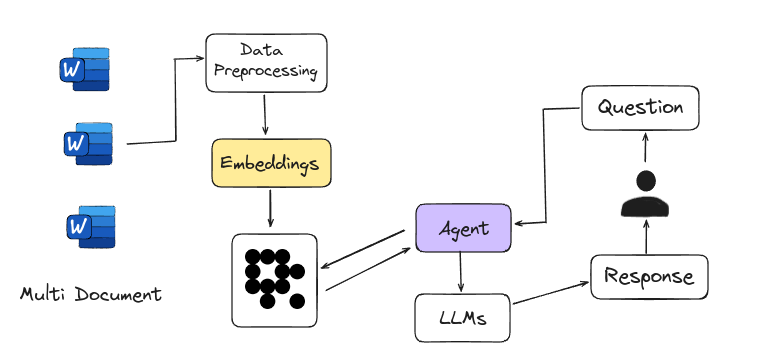

Agentic RAG is Agent-based RAG introduces an advanced framework for answering questions by using intelligent agents instead of just relying on large language models. These agents act like expert researchers, handling complex tasks such as detailed planning, multi-step reasoning, and using external tools. They navigate multiple documents, compare information, and generate accurate answers. This system is easily scalable, with each new document set managed by a sub-agent, making it a powerful tool for tackling a wide range of information needs.

|

||||

|

||||

<figure markdown="span">

|

||||

|

||||

<figcaption>Agent-based RAG</figcaption>

|

||||

</figure>

|

||||

|

||||

[](https://colab.research.google.com/github/lancedb/vectordb-recipes/blob/main/tutorials/Agentic_RAG/main.ipynb)

|

||||

|

||||

Here’s a code snippet for defining a retriever using LangChain:

```python
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain_community.document_loaders import WebBaseLoader
from langchain_community.vectorstores import LanceDB
from langchain_openai import OpenAIEmbeddings

urls = [
    "https://content.dgft.gov.in/Website/CIEP.pdf",
    "https://content.dgft.gov.in/Website/GAE.pdf",
    "https://content.dgft.gov.in/Website/HTE.pdf",
]

# load each URL, then flatten the per-URL lists of documents
docs = [WebBaseLoader(url).load() for url in urls]
docs_list = [item for sublist in docs for item in sublist]

text_splitter = RecursiveCharacterTextSplitter.from_tiktoken_encoder(
    chunk_size=100, chunk_overlap=50
)
doc_splits = text_splitter.split_documents(docs_list)

# add documents in LanceDB
vectorstore = LanceDB.from_documents(
    documents=doc_splits,
    embedding=OpenAIEmbeddings(),
)
retriever = vectorstore.as_retriever()
```

Below is the agent, together with the nodes that grade the retrieved documents and, when they are not relevant, formulate an improved query for better retrieval results:

```python
from typing import Literal

from langchain_core.messages import HumanMessage
from langchain_core.prompts import PromptTemplate
from langchain_core.pydantic_v1 import BaseModel, Field
from langchain_openai import ChatOpenAI

# `tools` is assumed to be defined earlier (e.g. a retriever tool wrapping `retriever`).


def grade_documents(state) -> Literal["generate", "rewrite"]:
    """Decide whether the retrieved documents are relevant to the question."""

    class grade(BaseModel):
        binary_score: str = Field(description="Relevance score 'yes' or 'no'")

    model = ChatOpenAI(temperature=0, model="gpt-4-0125-preview", streaming=True)
    llm_with_tool = model.with_structured_output(grade)
    prompt = PromptTemplate(
        template="""You are a grader assessing relevance of a retrieved document to a user question. \n
        Here is the retrieved document: \n\n {context} \n\n
        Here is the user question: {question} \n
        If the document contains keyword(s) or semantic meaning related to the user question, grade it as relevant. \n
        Give a binary score 'yes' or 'no' to indicate whether the document is relevant to the question.""",
        input_variables=["context", "question"],
    )
    chain = prompt | llm_with_tool

    messages = state["messages"]
    last_message = messages[-1]
    question = messages[0].content
    docs = last_message.content

    scored_result = chain.invoke({"question": question, "context": docs})
    score = scored_result.binary_score
    return "generate" if score == "yes" else "rewrite"


def agent(state):
    """Call the model, which may decide to use the retriever tool."""
    messages = state["messages"]
    model = ChatOpenAI(temperature=0, streaming=True, model="gpt-4-turbo")
    model = model.bind_tools(tools)
    response = model.invoke(messages)
    return {"messages": [response]}


def rewrite(state):
    """Rewrite the original question to improve retrieval."""
    messages = state["messages"]
    question = messages[0].content
    msg = [
        HumanMessage(
            content=f""" \n
    Look at the input and try to reason about the underlying semantic intent / meaning. \n
    Here is the initial question:
    \n ------- \n
    {question}
    \n ------- \n
    Formulate an improved question: """,
        )
    ]
    model = ChatOpenAI(temperature=0, model="gpt-4-0125-preview", streaming=True)
    response = model.invoke(msg)
    return {"messages": [response]}
```

[Open In Colab](https://colab.research.google.com/github/lancedb/vectordb-recipes/blob/main/tutorials/Agentic_RAG/main.ipynb)
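The `agent`, `grade_documents`, and `rewrite` functions are meant to be wired into a graph (the linked notebook uses LangGraph). As a rough, framework-free sketch of that control flow, assuming stubbed callables in place of the retriever tool and the LLM-backed nodes, the loop retrieves, grades, and either answers or rewrites the question and tries again, up to a hypothetical `max_rewrites` limit:

```python
from typing import Callable, List


def agentic_rag(question: str,
                retrieve: Callable[[str], List[str]],
                grade: Callable[[str, List[str]], str],
                rewrite: Callable[[str], str],
                generate: Callable[[str, List[str]], str],
                max_rewrites: int = 2) -> str:
    """Retrieve, grade, then either generate or rewrite the query and try again."""
    query = question
    for _ in range(max_rewrites + 1):
        docs = retrieve(query)
        if grade(question, docs) == "generate":  # same labels as grade_documents
            return generate(question, docs)
        query = rewrite(query)                   # formulate an improved question
    return "No relevant documents found."


# Hypothetical stubs standing in for the retriever tool and the LLM-backed nodes.
knowledge = {"improved question": ["relevant passage"]}


def stub_retrieve(q: str) -> List[str]:
    return knowledge.get(q, [])


def stub_grade(q: str, docs: List[str]) -> str:
    return "generate" if docs else "rewrite"


def stub_rewrite(q: str) -> str:
    return "improved question"


def stub_generate(q: str, docs: List[str]) -> str:
    return f"answer from {docs[0]}"


print(agentic_rag("vague question", stub_retrieve, stub_grade, stub_rewrite, stub_generate))
# answer from relevant passage
```

The rewrite limit matters in practice: without it, a question the knowledge base cannot answer would keep the agent looping.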

120

docs/src/rag/corrective_rag.md

Normal file

@@ -0,0 +1,120 @@

**Corrective RAG ✅**
====================================================================

Corrective-RAG (CRAG) is a strategy for Retrieval-Augmented Generation (RAG) that includes self-reflection and self-grading of retrieved documents. Here’s a simplified breakdown of the steps involved:

1. **Relevance Check**: If at least one document meets the relevance threshold, the process moves forward to the generation phase.
2. **Knowledge Refinement**: Before generating an answer, the process refines the knowledge by dividing the document into smaller segments called "knowledge strips."
3. **Grading and Filtering**: Each "knowledge strip" is graded, and irrelevant ones are filtered out.
4. **Additional Data Source**: If all documents are below the relevance threshold, or if the system is unsure about their relevance, it seeks additional information by performing a web search to supplement the retrieved data.

The steps above are described in the **[Official Paper](https://arxiv.org/abs/2401.15884)**.

<figure markdown="span">

<figcaption>Corrective RAG: <a href="https://github.com/HuskyInSalt/CRAG">Source</a>
</figcaption>
</figure>

Corrective Retrieval-Augmented Generation (CRAG) works like a **built-in fact-checker**.

**[Official Implementation](https://github.com/HuskyInSalt/CRAG)**

[Open In Colab](https://colab.research.google.com/github/lancedb/vectordb-recipes/blob/main/tutorials/Corrective-RAG-with_Langgraph/CRAG_with_Langgraph.ipynb)
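The four CRAG steps listed earlier can be sketched as one decision function that returns the context to generate from. The relevance scorer, the `upper`/`lower` thresholds, the sentence-level "knowledge strips", and the `web_search` callable are all illustrative assumptions (the paper uses a trained retrieval evaluator and its own thresholds); the three branches roughly mirror the paper's Correct, Incorrect, and Ambiguous actions.

```python
from typing import Callable, List


def knowledge_strips(doc: str) -> List[str]:
    """Split a document into sentence-level strips (a deliberate simplification)."""
    return [s.strip() for s in doc.split(".") if s.strip()]


def crag_context(question: str, docs: List[str],
                 score: Callable[[str, str], float],
                 web_search: Callable[[str], List[str]],
                 upper: float = 0.7, lower: float = 0.3) -> List[str]:
    """Return the context to generate from, following the corrective steps."""
    doc_scores = [score(question, d) for d in docs]

    def refine(selected: List[str]) -> List[str]:
        # Knowledge refinement, then grading and filtering of each strip.
        strips = [s for d in selected for s in knowledge_strips(d)]
        return [s for s in strips if score(question, s) >= lower]

    if any(s >= upper for s in doc_scores):  # relevance check passed
        return refine([d for d, s in zip(docs, doc_scores) if s >= upper])
    if all(s < lower for s in doc_scores):   # nothing relevant: use web search instead
        return web_search(question)
    # unsure: keep refined borderline documents and supplement them with web results
    return refine([d for d, s in zip(docs, doc_scores) if s >= lower]) + web_search(question)


def overlap_score(question: str, text: str) -> float:
    """Toy relevance score: fraction of question words present in the text."""
    q = set(question.lower().split())
    t = set(text.lower().replace(".", " ").split())
    return len(q & t) / len(q) if q else 0.0


def fake_web_search(question: str) -> List[str]:
    return ["web result"]


question = "lancedb vector index"
docs = ["LanceDB builds a vector index. The weather is sunny.", "Bananas are yellow."]
print(crag_context(question, docs, overlap_score, fake_web_search))  # ['LanceDB builds a vector index']
```

Note how the off-topic sentence inside the relevant document is dropped during strip filtering: that refinement step is what lets CRAG act like a fact-checker rather than passing whole documents to the LLM.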

Here’s a code snippet that defines a table with the [Embedding API](https://lancedb.github.io/lancedb/embeddings/embedding_functions/) and retrieves the relevant documents:

```python
import pandas as pd
import lancedb
from lancedb.pydantic import LanceModel, Vector
from lancedb.embeddings import get_registry

db = lancedb.connect("/tmp/db")
model = get_registry().get("sentence-transformers").create(name="BAAI/bge-small-en-v1.5", device="cpu")


class Docs(LanceModel):
    text: str = model.SourceField()
    vector: Vector(model.ndims()) = model.VectorField()


table = db.create_table("docs", schema=Docs)